In the future, you might shake your camera for sharper images – yes, really! Here's why…

Scientists say that, with specialized software, you can extract even crisper detail by creating motion blur on purpose – turning blur into a tool for high-resolution photography.

Camera shake – it's the one thing we all try to avoid. And yet, by doing it, we can get sharper, high-resolution images? It sounds like photography heresy. But new research from Brown University says it might just be the next big leap in image quality.

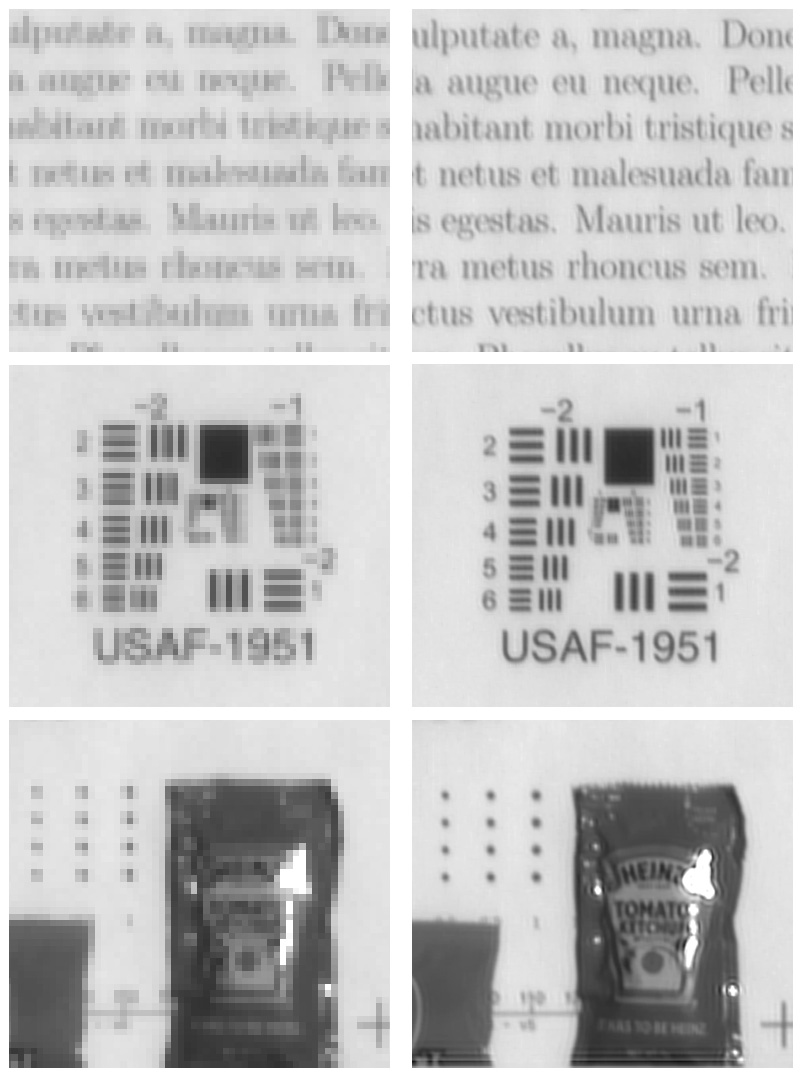

A team of engineers at the university in Providence, Rhode Island, has developed an algorithm that turns motion blur into a resolution booster – enabling cameras to capture more detail than the sensor's native resolution would normally allow.

“What we show is that an image captured by a moving camera actually contains additional information that we can use to increase image resolution,” said Pedro Felzenszwalb, a professor of engineering and computer science at Brown.

The study was recently presented at the International Conference on Computational Photography and is posted on arXiv.

As a photographer, this instantly got me thinking: what if we could create sharper images than our gear typically allows – without upgrading the sensor? A sort of resolution upgrade through software alone. Imagine capturing gigapixel-level detail using the camera you already own – whether it's a mirrorless camera, DSLR, compact camera or even a smartphone.

This turns conventional wisdom on its head. Motion blur, instead of being the enemy, becomes an asset. Multi-shot techniques like pixel-shift high-resolution modes – which usually require a tripod, several exposures and careful processing – could become obsolete for certain applications.

So how does it actually work?

We know that camera sensors record light as pixels. Each pixel averages the light it receives over a small area. That means anything smaller than a pixel usually gets blurred or lost – that's the limitation of your sensor's resolution.
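A tiny one-dimensional simulation makes this limit concrete. The sketch below is purely illustrative: `sensor_capture` is a hypothetical helper that models each pixel as a simple box average over `pixel_size` finer samples of the scene, ignoring optics, noise and color filters. A scene with stripes finer than a pixel and a plain grey scene come out identical.

```python
def sensor_capture(scene, pixel_size):
    """Model each pixel as the average of `pixel_size` consecutive fine samples."""
    if len(scene) % pixel_size != 0:
        raise ValueError("scene length must be a multiple of pixel_size")
    return [sum(scene[i:i + pixel_size]) / pixel_size
            for i in range(0, len(scene), pixel_size)]


detailed = [1, 0, 1, 0, 1, 0, 1, 0]   # light/dark stripes half a pixel wide
flat = [0.5] * 8                      # uniform mid-grey
print(sensor_capture(detailed, 2))    # [0.5, 0.5, 0.5, 0.5]
print(sensor_capture(flat, 2))        # [0.5, 0.5, 0.5, 0.5]
```

Once averaged, the stripes are indistinguishable from grey: the fine detail is simply not in the recorded pixel values.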

But here's where it gets interesting: when the camera moves during exposure, the light from fine details moves across multiple pixels, creating blur trails.
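The same kind of toy model hints at why those trails carry information. In this hedged sketch, `expose_moving_point` is a hypothetical function that assumes a single point of light and a known, steady pan across a 1D sensor; real camera shake is two-dimensional and irregular. Two points sitting at different positions inside the same pixel look identical when the camera is still, but leave different blur trails when it moves.

```python
import math


def expose_moving_point(num_pixels, start, velocity, steps):
    """Simulate one exposure of a single point of light on a 1D sensor.

    The point starts at `start` (in pixel units) and drifts by `velocity`
    pixels per time step, a hypothetical linear camera motion. Each step
    deposits an equal share of the light into the pixel the point falls on.
    """
    image = [0.0] * num_pixels
    for t in range(steps):
        pos = start + velocity * t      # position on the sensor at this instant
        pixel = math.floor(pos)         # the pixel that receives the light
        if 0 <= pixel < num_pixels:
            image[pixel] += 1.0 / steps
    return image


# Without motion, points at 1.0 and 1.5 land in the same pixel.
print(expose_moving_point(6, 1.0, 0.0, 4))  # [0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
print(expose_moving_point(6, 1.5, 0.0, 4))  # [0.0, 1.0, 0.0, 0.0, 0.0, 0.0]

# With the same motion, their trails differ, revealing the sub-pixel offset.
print(expose_moving_point(6, 1.0, 0.5, 4))  # [0.0, 0.5, 0.5, 0.0, 0.0, 0.0]
print(expose_moving_point(6, 1.5, 0.5, 4))  # [0.0, 0.25, 0.5, 0.25, 0.0, 0.0]
```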

Instead of discarding this blur, the new algorithm developed by Brown University uses it. These light trails provide extra spatial information, almost like viewing your subject from multiple tiny perspectives during exposure. The software then reconstructs the fine detail by analyzing how those points moved – essentially letting the camera "see between the pixels."
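The Brown team's reconstruction isn't spelled out in enough detail here to reproduce, so the sketch below illustrates only the underlying principle, using a simpler and well-known relative: multi-frame super-resolution, where the scene is recorded several times with the pixel grid offset by known sub-pixel amounts. Unlike the single-exposure method described above, it uses separate captures, and it assumes the shifts are known exactly and that the scene is dark just outside the frame. `capture_shifted` and `reconstruct` are hypothetical names.

```python
def capture_shifted(scene, pixel_size, shift):
    """Box-average a 1D scene with the pixel grid displaced by `shift` fine samples.

    Samples outside the scene are treated as 0, i.e. a dark border (an assumption).
    """
    n = len(scene)
    pixels = []
    start = -shift
    while start < n:
        window = [scene[i] if 0 <= i < n else 0.0
                  for i in range(start, start + pixel_size)]
        pixels.append(sum(window) / pixel_size)
        start += pixel_size
    return pixels


def reconstruct(captures, pixel_size, n):
    """Recover n fine samples from captures taken at every shift 0..pixel_size-1."""
    # Map each window's starting position to the total light it collected.
    window_sum = {}
    for shift, pixels in captures.items():
        for k, value in enumerate(pixels):
            window_sum[k * pixel_size - shift] = value * pixel_size
    recovered = []
    for j in range(n):
        # The window ending at sample j starts at j - pixel_size + 1; every other
        # sample in it is already recovered or lies in the dark border.
        start = j - pixel_size + 1
        known = sum(recovered[i] for i in range(max(start, 0), j))
        recovered.append(window_sum[start] - known)
    return recovered


detailed = [1, 0, 1, 0, 1, 0, 1, 0]
flat = [0.5] * 8
for scene in (detailed, flat):
    captures = {s: capture_shifted(scene, 2, s) for s in range(2)}
    print(reconstruct(captures, 2, len(scene)))
# [1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
# [0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
```

A single unshifted capture cannot tell the stripes from flat grey, but each offset grid measures a different set of overlapping windows, and together they pin down every fine sample. Real systems face noise and unknown motion, which is where more sophisticated estimation comes in.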

Think of it as a smarter cousin of multi-shot techniques like pixel shift, which combine several slightly offset exposures – except that here the motion happens within a single exposure and the detail is reconstructed in software. The result is a sharper image with more detail than the sensor's native resolution would normally capture.

Who actually needs this?

Sure, for everyday photography, this might seem like overkill. But in specialized fields such as medical photography, art documentation or archival imaging, where capturing ultra-fine detail matters, this could be revolutionary.

It also has big implications for mobile photography, where sensors are small and physical resolution is limited, or aerial imaging, where motion is often unavoidable.

Now the Brown University team is looking for industry partners and plans to refine the technology for public use in the coming years. This could be a very exciting time!

Kim is a photographer, editor and writer with work published internationally. She holds a Master's degree in Photography and Media and was formerly Technique Editor at Digital Photographer, focusing on the art and science of photography. Blending technical expertise with visual insight, Kim explores photography's time-honored yet ever-evolving role in culture. Through her features, tutorials, and gear reviews, she aims to encourage readers to explore the medium more deeply and embrace its full creative potential.
