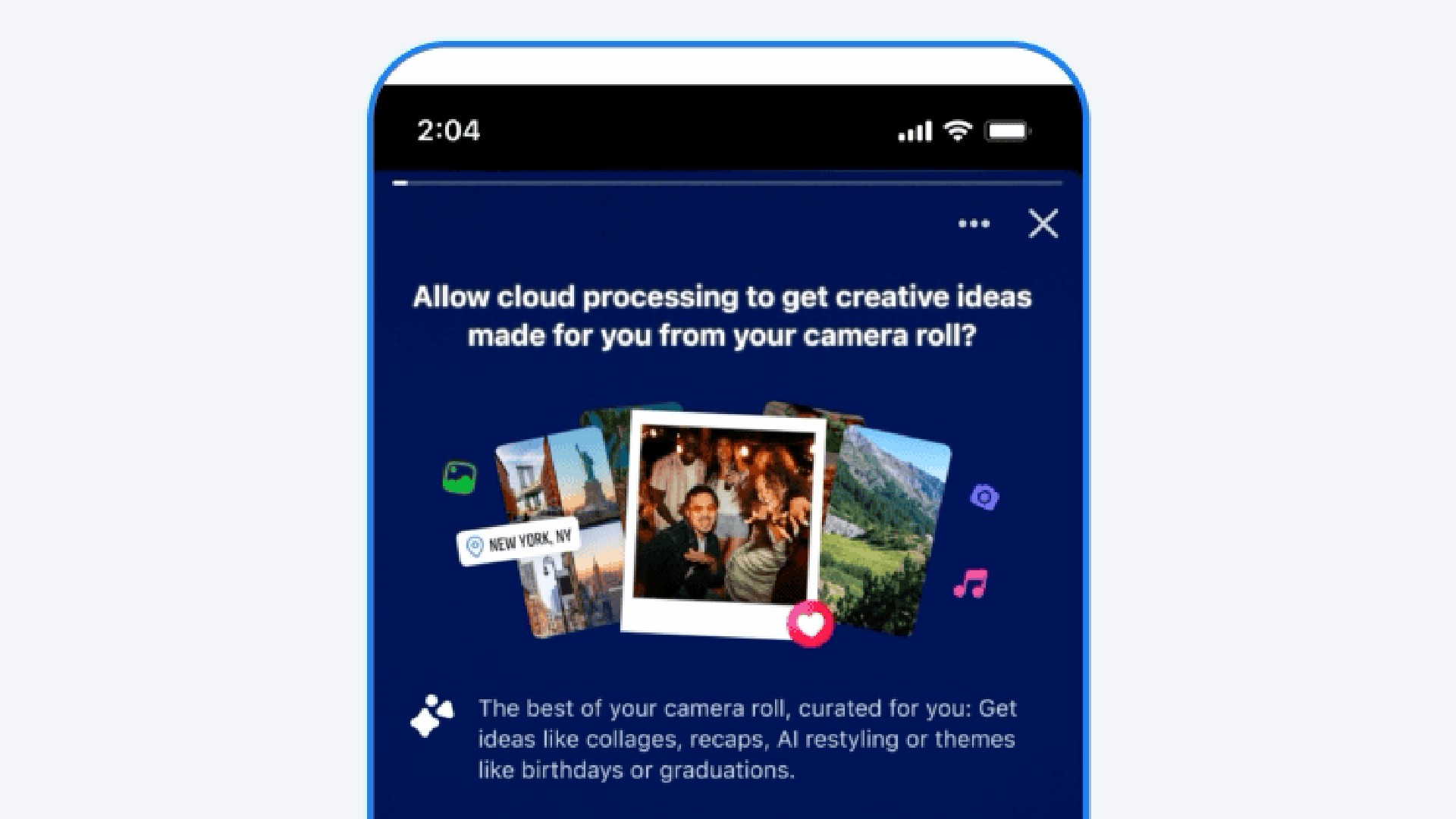

Finding it difficult to track down an image you uploaded to Facebook? Well, here's what might sound like good news. The social giant has introduced a new opt-in feature that will scan your phone’s camera roll, suggest edits and collages, and help you share “hidden gems” you may have missed.

It’s being pitched as an effortless way to rediscover forgotten moments and make them “shareworthy”. The company says it’s rolling out now in the US and Canada, with plans to expand soon. Facebook adds that all suggestions are private, and nothing is shared unless you choose to post it.

But, as always, there’s a caveat. And it’s one that photographers, videographers, and anyone else protective of their images should pay attention to.

AI training

Around the world, photographers, filmmakers and other creatives are currently in uproar over AI companies training models on their images. So what's Facebook's policy here? Well, it insists it doesn’t use your camera roll to train its AI... unless you edit the suggested content with Meta’s tools, or share it publicly. In other words, the minute you take advantage of what the feature is designed to do, you’ve effectively handed over permission for Meta to use that material to “improve” its AI model.

That’s a significant trade-off, especially for professionals whose camera rolls are likely to contain raw files, client work or unreleased projects. While Meta says these images are uploaded privately to its cloud, the idea of giving a social media giant ongoing access to your camera roll, even on an opt-in basis, isn't something many professionals will feel comfortable with.

After all, this isn’t just about privacy; it’s also about ownership. Once your image passes through Meta’s systems, how confident can you be that it won’t end up influencing future AI models, even indirectly? The company’s privacy statement is careful with its words, but far less reassuring in spirit.

Where's the upside?

So that's the downside; what's the upside here, exactly? What problem is Facebook trying to solve?

According to Meta, the problem is that people have stopped sharing personal photos because they don’t feel their snaps are “special” enough. The new feature, the company says, can take care of that by enhancing images, building collages and crafting little highlight reels so you can reconnect with friends and family.

But I think that's all nonsense. People haven’t stopped sharing because their photos aren’t good enough. They’ve stopped because they don’t trust the platform anymore. Between data scandals, algorithmic manipulation, and a general sense that Facebook has become more ad network than community, many users have simply opted out.

Facebook’s answer to all that is... more AI? To me, that sounds like someone breaking your camera, then offering to lend you a better one, as long as they get to keep the negatives. So I'm going to avoid opting in to this new feature, and I suggest you do, too.

Tom May is a freelance writer and editor specializing in art, photography, design and travel. He has been editor of Professional Photography magazine, associate editor at Creative Bloq, and deputy editor at net magazine. He has also worked for a wide range of mainstream titles including The Sun, Radio Times, NME, T3, Heat, Company and Bella.
