This NASA-made camera photographs the invisible – and the tech is based on an accessory photographers already use

Researchers at NASA have created a simpler camera system for visualizing wind – and it won an award



Imagine being given the task of shooting a video of something invisible: wind. The challenge is common and important enough that NASA set out to build a camera for the job, and it works using polarization.

The Self-Aligned Focusing Schlieren, or SAFS for short, is a camera that can see air movement, an essential tool that helps scientists and engineers look at the airflow around planes, rockets, and other vehicles.

Research into the SAFS at NASA’s Langley Research Center dates back to 2020, but NASA has recently shared a glimpse of what the tech looks like now that it has won several awards, including the 2025 NASA Government Invention of the Year. Why did a camera earn the highest award that NASA gives to new tech? The system allows engineers to study aerodynamics with a much simpler, lower-cost setup than earlier methods.

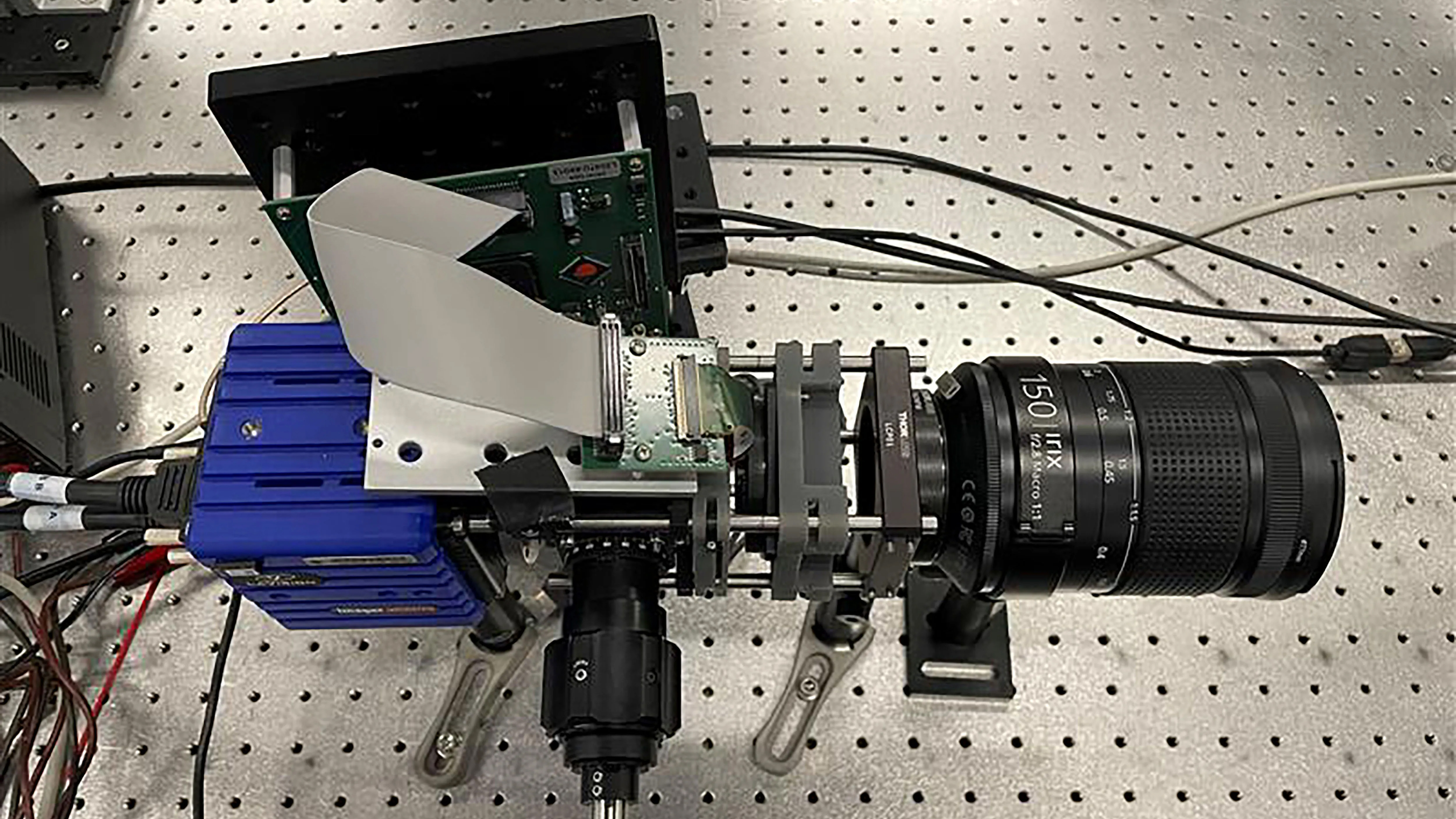

The key to the SAFS's success is related to something already in many photographers' bags: a polarizing filter. The system is, of course, a bit more complex than twisting a polarizing filter onto a camera lens, but it is built using a “commercial-off-the-shelf camera” and mounts much like an oversized lens.

Earlier methods of visualizing wind used two precisely aligned screens and a special camera that could see air movement by detecting changes in air density. But the earlier focusing schlieren imaging demanded a level of precision that made setup a multi-day process, one that could be disrupted by an accidental bump.

The SAFS instead uses a single grid with polarization. Polarizers filter light based on the orientation of its waves, and integrating that concept lets the camera work without a second screen, cutting the setup time from weeks to minutes.

As a NASA patent explains, light passes through a condenser lens and a linear polarizer towards a beam splitter. The light then passes through the single grid and around the object being tested. A reflective background sends the light back, so it passes the object a second time. The light then passes through the grid a second time before being captured by the camera.


If that sounds complicated, imagine how complex the previous system was. As NASA described the earlier method: “It’s the equivalent of lining up two window screens on opposite sides of a room so their patterns match exactly.”

NASA now uses the technology to improve predictions for takeoffs and landings of new aircraft, and the system has been adopted beyond NASA, including in more than eight countries.

“When researchers can see and understand air movement in ways that were previously difficult to achieve, it leads to better aircraft designs and safer flights for everyone,” said Brett Bathel, the co-inventor of the SAFS alongside Joshua Weisberger and a member of the team at NASA’s Langley Research Center.


Find more space inspiration with the best lenses for astrophotography or the best cameras for astrophotography.

With more than a decade of experience writing about cameras and technology, Hillary K. Grigonis leads the US coverage for Digital Camera World. Her work has appeared in Business Insider, Digital Trends, Pocket-lint, Rangefinder, The Phoblographer, and more. Her wedding and portrait photography favors a journalistic style. She’s a former Nikon shooter and a current Fujifilm user, but has tested a wide range of cameras and lenses across multiple brands. Hillary is also a licensed drone pilot.
