What's next for Sony cameras? Glimpse the technology of the future

Watch sports in AR, fix your photos, and fly around the world from your house: check out all the latest tech from Sony

Sony has just wrapped up its 50th-anniversary celebration, a chance to show off all the incredible advancements in technology Sony is currently working on behind closed doors.

Usually, the media are not allowed a peek into this R&D world, but this year Sony decided to trickle out a few exciting glimpses into projects and concepts that will be making their way into products in the future.

Using AI to perfect digital photos and video

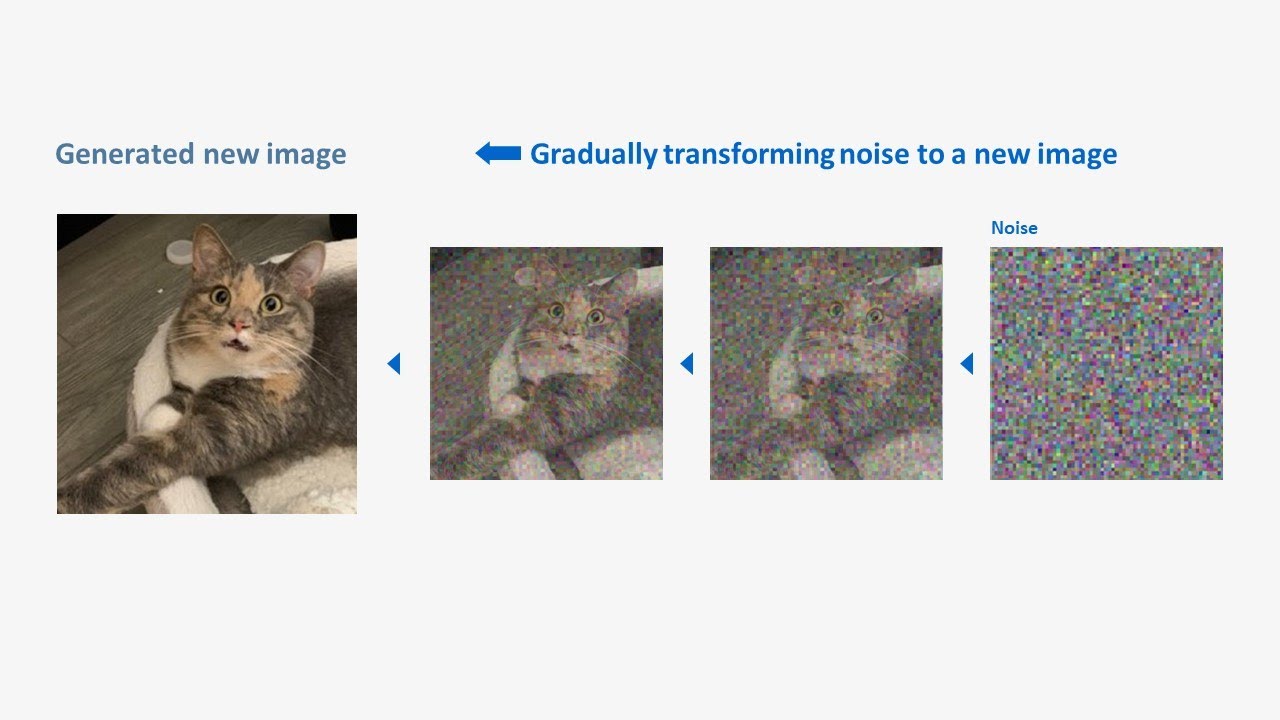

Using artificial intelligence and deep learning models, Sony hopes to reduce digital imperfections such as noise in images and audio, and blur in images. This is Sony's take on a technology that we have started to see pop up a lot in the latest camera phones.

Trained on thousands of images, videos, and audio recordings, Sony's Deep Generative Models (DGMs) can dramatically reduce digital imperfections in an image by working out what the image depicts and using machine learning to fill in the missing information. The technology is already used to improve noise reduction in Sony's excellent range of noise-canceling headphones.

Both Apple and Google have already made huge strides in this area. Apple has its Deep Fusion technology, which pulls details out of the shadows and highlights without adding noise or artifacts. Google's latest Pixel 7 phones come with a raft of new AI features, including its very good Night Sight and blur-reduction technologies.

As Sony is the world's biggest image sensor manufacturer, it is great to see it bringing this technology to anyone using its sensors, so we should see a lot more smartphones capable of these image-correction tricks in the near future.

New Time of Flight sensors can enable brand new subject tracking opportunities

Sony has made a new time-of-flight (ToF) sensor and software kit that developers using its imaging sensors can use for AR (augmented reality) applications. ToF sensors are exciting tech: they measure depth by emitting light, typically from an infrared laser, and timing how long it takes to bounce back, which lets them track movement in real time.
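To make the time-of-flight idea concrete, here is a minimal sketch of the basic direct-ToF calculation: light travels to a surface and back, so the distance is half of the speed of light multiplied by the round-trip time. The function names below are hypothetical and purely illustrative; they do not reflect Sony's ToF sensor or software kit, and many real ToF sensors infer the delay from the phase shift of modulated light rather than timing a single pulse.

```python
# Speed of light in a vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Hypothetical helper: distance to a surface from a direct ToF measurement.

    The emitted light travels to the surface and back, so the one-way
    distance is half of (speed of light x round-trip time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def depth_map_m(round_trip_times_s: list[list[float]]) -> list[list[float]]:
    """Hypothetical helper: convert a grid of per-pixel round-trip times
    (one value per sensor pixel) into a depth map in metres."""
    return [[tof_distance_m(t) for t in row] for row in round_trip_times_s]


if __name__ == "__main__":
    # A 10-nanosecond round trip corresponds to a surface roughly 1.5 m away.
    print(tof_distance_m(10e-9))
    print(depth_map_m([[10e-9, 20e-9], [4e-9, 8e-9]]))
```

Because every pixel gets its own distance, a sensor like this produces a depth map many times per second, which is what makes fine-grained hand tracking possible regardless of the subject's color.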

In Sony's demonstrations, the system tracked a model's hands in exceptional detail as they moved. The tracking can detect incredibly subtle movements while keeping motion smooth, and it is completely unaffected by color. The new ToF AR kit can also be combined with AI and machine learning to predict finger movements.

This also has exciting potential for future camera tracking and focusing systems. The iPhone is already pushing hard with ToF features using its LiDAR sensor, so it may only be a matter of time before we see a lot more of this kind of system in camera phones and mirrorless cameras.

Changing the way we watch sports with Hawkvision

Many tennis fans will be familiar with Hawkeye, which for years has been used to make line calls. It has also recently been deployed at the FIFA World Cup 2022 in the latest iteration of the controversial VAR system.

Sony showed off huge improvements to the Hawkeye system. The most impressive is the incredible Skeletrak system, which uses cameras all around the stadium to track the players right down to the movements of their skeletons. This is useful for sporting decisions such as offside calls, but the wider potential of the technology is also huge. The Hawkvision system can combine the data it gathers with AI and machine learning drawing on player stats, tactics, and historic data to predict player movements.

Now, this is where it gets really interesting. Hawkvision also allows a 3D model of the entire field and its players to be built, so the game can be followed in augmented or virtual reality. In theory, this would let football fans choose their own camera angles, even from pitch level: a system that could fundamentally alter the way we consume sports.

Create realistic cityscape images without leaving the house

Using terrain data, satellite and aerial imagery, sun positioning, and real-time weather reports, Sony's Mapray tech lets users create a realistic rendering of a real-life place. Drawing on all this public data helps make the rendered 3D image as accurate as possible.

Beyond commercial uses such as city planning and architecture, this will also have an impact on the entertainment industry, with movies and images no longer needing to be shot on location if perfectly realistic locations can be conjured up instead. It will also bring huge benefits to those creating content for the rapidly expanding metaverse, and city and landscape photographers might even be able to photograph distant cities across the globe without leaving their own homes.

The tech shown off by Sony still looks very digital, reminding me of Microsoft Flight Simulator, but as graphics resolution improves and AI creates more realistic interpretations, we will start to see these cities and places like never before.

Change how you create with real-time audience emotional feedback from VX

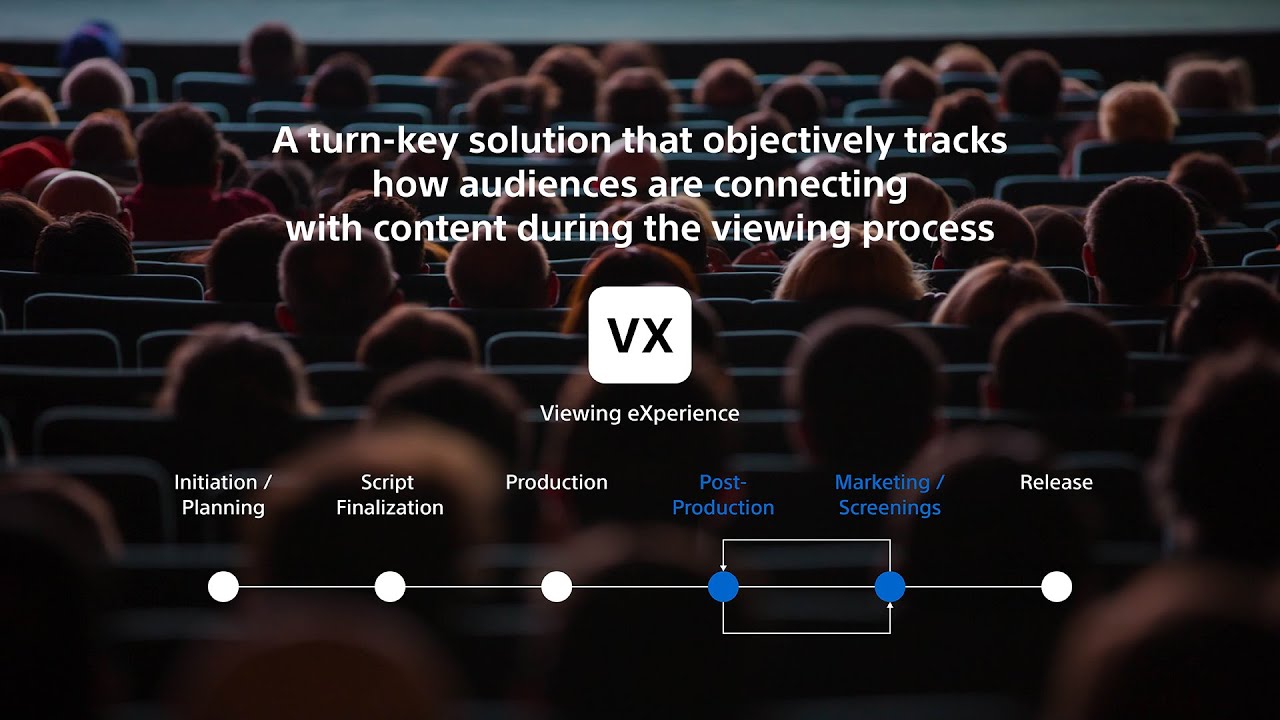

We are used to creating work and then hoping for the best reaction upon release, but with the Viewer Experience (VX) AI learning system from Sony, content creators can get detailed emotional feedback from users during production.

Using a system of video cameras that monitor and interpret facial expressions, heart rate sensors, and audio cues such as audience gasps or clapping, the VX system can create a real-time graph of exactly what emotional response your content inspires in its audience.

The end goal of this tech is to give creators time to rework their projects in response to honest audience reactions, so that the final version is as successful as possible. Whilst we have seen test screenings for decades, this is a real attempt to use AI to dictate how content is shaped and formed.

Gareth is a photographer based in London, working as a freelance photographer and videographer for the past several years, having the privilege to shoot for some household names. With work focusing on fashion, portrait and lifestyle content creation, he has developed a range of skills covering everything from editorial shoots to social media videos. Outside of work, he has a personal passion for travel and nature photography, with a devotion to sustainability and environmental causes.