You probably missed some major editing news this week. Here's a recap of everything announced for Photoshop, Lightroom, Premiere Pro, Firefly, and more at Adobe Max.

Adobe Max 2025 has brought some key changes to Lightroom, Photoshop, Premiere Pro, Firefly, and more

Adobe's annual creativity conference has brought some of the biggest software updates of 2025 across programs like Photoshop, Lightroom, Premiere Pro, and Firefly.

Held October 28-30, Adobe Max brought several key updates to the brand's photo and video editors. With such a major event, there's a lot of news to sift through, from AI culling in Lightroom and the ability to customize Firefly models with your own photos to agentic editing in Photoshop, Photoshop 2026, and more.

And that doesn't even include the Sneaks, the look at upcoming tech being worked on behind the scenes that could make its way to future editions of programs like Photoshop and Premiere Pro.

I just returned from Los Angeles, where I watched Adobe's biggest announcements of the year live on stage. In case you missed it, here's a recap of the key photo and video announcements at Adobe Max, posted as they happened.

Shantanu Narayen, Adobe CEO, is opening the keynote with a statement on AI and "creativity as a universal language."

"Our vision for Adobe Firefly is to make it your one stop destination for creative workflows," he says.

Here's an interesting statistic: Two out of every three creators using the beta version of Photoshop use generative AI in their workflow every day.

David Wadhwani, President, Adobe Digital Media Business, says there is now five times more demand for content and that 77 percent of creative and marketing teams are hiring.

This is all happening in the age of AI. To meet creators at this intersection, Wadhwani says Adobe is focusing on three things: continuing to deliver Adobe's own Firefly models, integrating partner models from third-party platforms, and letting creators customize and build their own models.

The first two aren't a surprise: Firefly has been around for a while, and Adobe has already integrated models like Nano Banana. But this morning Adobe also announced the ability to customize your own AI models, launching in private beta in Firefly. This lets users feed the AI their own images to get results that are more tailored to their specific style.

Adobe has announced a deep integration of Google models into Creative Cloud and Firefly apps.

Eli Collins, VP of Google DeepMind, says Nano Banana has generated over 5 million images since launching two months ago.

Adobe is introducing Custom Models, personalized versions of Firefly trained on your own assets. The feature was announced earlier today, and Adobe is now demoing what it looks like.

Users drop in their own reference images and load them into a custom model. The AI then scans and tags the images and generates captions based on their content to understand the style. You need at least 10 images.

Then, in Firefly, creators can choose that custom model from the drop-down menu. Creators can also keep multiple styles, with multiple models for each.

Those assets can then be opened in Photoshop to add details and refine what the AI generated in the Firefly app, including mixing multiple elements into a collage.

Adobe says the private beta will be available in the coming days.

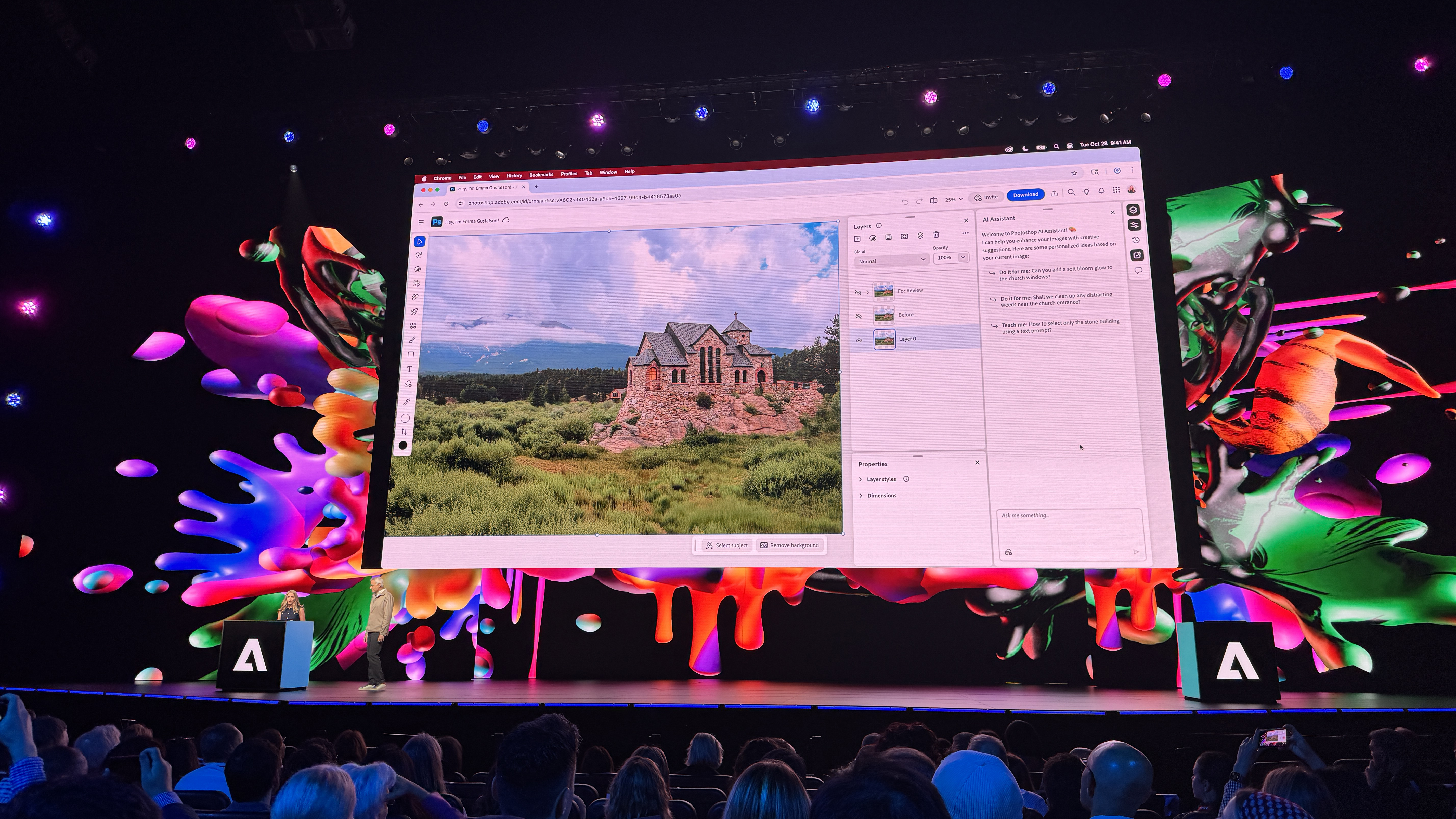

Agentic AI is coming to a number of Adobe apps, including Photoshop. The conversational AI assistant lets creators type a natural-language prompt asking the AI to carry out a specific task.

These assistants are designed to work with each app's native tools so that creators keep full creative control. But the natural language element may also help novice creators work inside advanced apps without knowing all the advanced tools.

In Photoshop Labs, Adobe demoed the tool by asking it to brighten everything except the subject. The result is created on a separate layer, so the edit is non-destructive, and creators can still use the usual Photoshop tools to perfect it.

The AI assistant can also be used to ask for advice right inside Photoshop. In the demo, Adobe asked the AI to review a design layout. The AI suggested adding more contrast and even explained how to do it.

That conversational chatbot could help new users tackle tasks they don't yet know how to do themselves.

Adobe also demoed asking the AI to rename all of the layers for them, much to the delight of the audience. The AI does a visual analysis and renames the layers based on what’s inside them.

Is Photoshop about to be integrated into ChatGPT? Adobe just demoed Express arriving in ChatGPT, but there's a hidden Easter egg here: the drop-down menu shows the Photoshop icon too, perhaps hinting at a future Photoshop integration in ChatGPT.

Adobe Firefly Image Model 5 is here. Adobe says it excels at generating realistic images, including textures and lighting, and that it generates at a native 4MP resolution before upscaling.

The model can also edit an image from a prompt, tweaking one element while keeping the rest of the image consistent. Adobe says it's designed to change as much as you ask for while leaving the rest of the image untouched.

Partner models allow creators to choose AI platforms from other companies and switch back and forth between them.

Firefly Boards is Adobe’s platform for creating mood boards and brainstorming ideas, such as planning a photo shoot.

Now, Boards is gaining presets, which apply a different style to an image. Restyling generates new images based on the selected style.

Collaboration is also coming to Boards, letting creators invite others to edit and chime in on ideas.

Boards also supports Partner Models such as Gemini 2.5, allowing users to mix multiple images together to create a new generation depicting an idea.

Creative Cloud users can open graphics generated in Boards in other apps, and Firefly's web-based editing tools can also be used to edit those generations.

Adobe Firefly is getting a new video editor in beta. In a demo, Adobe showed how the editor is integrated within Firefly, including moving back and forth between the video editor and Firefly’s image editor, as well as audio editing.

In the demo, Lucy Street uploaded a generated edit of an image as a reference, chose a style, then sent it to the Generate Video workspace. She then typed in a prompt, moving from the sketch style to a realistic image.

The video editor has a properties panel with controls like speed, duration, opacity, and scale, and the timeline looks fairly similar to Premiere Pro's.

The video editor also works with Firefly’s speech enhancement and background noise controls, and the before and after in the demo is quite impressive.

Street then demoed how the AI can highlight pauses in speech and delete them from the video. The video can also be edited with text-based editing, by deleting text from the AI-generated transcript.

Firefly also now has audio capabilities to help create soundtracks for videos.

In the demo, Street used the video generator to animate a mural of a turtle, making it look as if the turtle is crashing through the building in the final video.

The new video editor is in public beta, rolling out starting today. Through December 1, Adobe CC users get unlimited image and video generations with Firefly to try out the new features.

The newly announced Premiere Mobile app on iPhone is here, and now Adobe is showing off some features in a demo.

In a live voiceover, Adobe asked the audience to create some background noise. The new Enhanced Speech tool can help remove that noise, and the before-and-after showed quite an improvement.

Premiere’s infinite tracks let creators layer in photos and stagger how and when they appear in the video.

The app’s image-to-video feature lets users generate a video from a photo using AI.

Sound effect generation is also built into the app, letting users combine their voice with a text prompt to create a custom sound effect timed to match the video.

Looks are like Lightroom presets for color grading, but the mobile app also has a handful of color grading tools for fine-tuning.

Finally, the demo included text effects, including highlighting the words as they are said in the voiceover. The results can be imported into Premiere Pro on desktop for further editing.

The Premiere Mobile app is already available to download for free in the App Store, with an Android version in the works.

Adobe has announced a partnership with YouTube that allows Premiere Mobile to send videos right to YouTube Shorts.

The Premiere Mobile app will have YouTube Shorts templates, and viewers on Shorts will also be able to create a new video from that template inside the Premiere Mobile app.

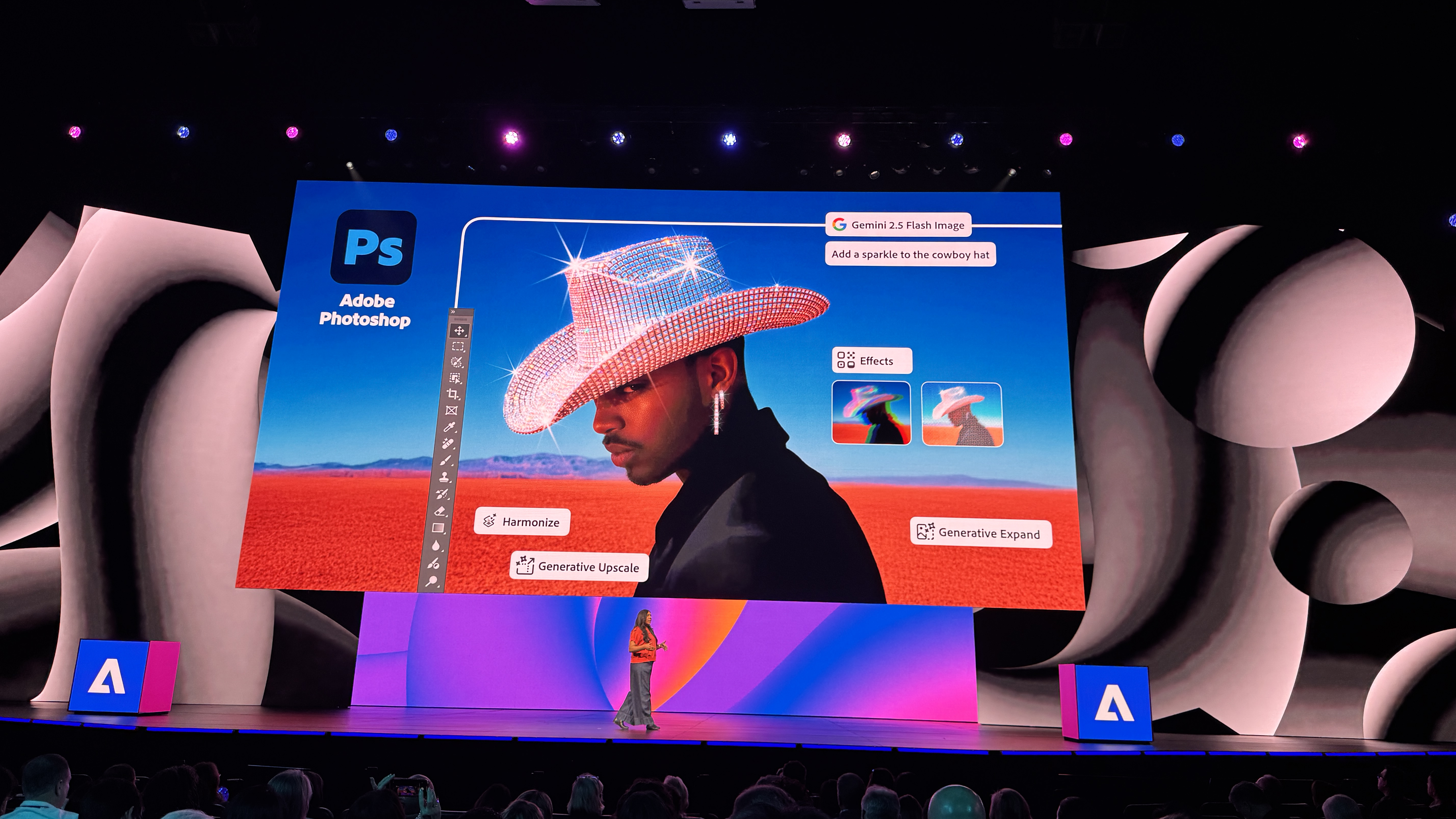

Generative upscale is coming to Photoshop, along with third-party models in Generative Fill. That brings some previously announced beta features into the full version of Photoshop, beginning today.

In the demo of Photoshop updates, Adobe showed a 2x upscale of an image generated in an earlier demo, with Photoshop’s AI upscaling adding more resolution.

Adobe also demoed the feature on a scan of an old family photo, using Topaz as the model to preserve faces. The generative upscale did seem to preserve the faces, including a bored, mid-blink child who still looked bored and mid-blink.

The demo also included some design features, including Dynamic Text, which automatically scales text so each line is the same length and readjusts as the creator resizes the text box.

In a composite, Adobe demonstrated combining AI tools like Remove Background with Photoshop’s precise manual tools. The Harmonize tool, previously in beta, is a one-click button on the AI toolbar that matches things like lighting and shadows when compositing images. In the demo, the AI even gave the astronaut added to the background a shadow and adjusted the coloring and lighting to better match the scene.

Generative Fill is also adding support for Partner Models. In another image, Adobe used a selection brush to control where the generative fill added new elements, using Nano Banana as a partner model.

Photoshop now also has a Send to Firefly option, which Adobe used to take the image into Firefly and generate a video with Firefly’s new video tools.

Lightroom is getting an automatic dust spot removal tool.

In a demo of the new Lightroom CC features, Terry White showed a new AI dust spot removal tool that both finds and removes dust spots for you. As a photographer who has dealt with dust spots before, I’m pretty geeked about this feature.

Lightroom is also gaining some new distraction and reflection removal tools.

The distraction removal tool will also remove people in one click. The tool is designed for tasks like removing tourists from vacation photos.

Reflection removal, first introduced in Adobe Camera Raw, is also coming to Lightroom. The slider uses AI to remove reflections, but drag it the other way and you can enhance the reflection instead.

Lightroom is gaining AI-assisted culling.

Perhaps one of the tools I’m most excited about from Adobe Max is Assistive Culling, an early access beta feature. This tool automatically sorts through photos and rejects shots with blinks or soft focus. Photographers can then review the results to make sure those rejects really are rejects.

Photographers can then use batch actions, ticking a check mark to apply a flag, a star rating, or another setting to all the selects or all the rejects.

The tool also groups similar images from a series into stacks, with what the AI thinks is the best shot at the top of each stack. When photos are taken into Photoshop, the edited versions are added to the top of the stack.

Premiere Pro is getting an Auto Bleep tool.

Auto Bleep is a new tool in beta that allows video editors to censor words, highlighting them in the text panel. Users can create a list of censored words and then Premiere Pro will automatically bleep those words out. It also works with custom sound effects, in case you want cuss words to become a duck quack instead of a bleep.

Premiere Pro is gaining AI-assisted masking.

One of my favorite AI tools in Lightroom is AI masking, and now similar tools are coming to video editing. At Adobe Max, Adobe demoed AI masking for Premiere Pro, a feature that was previously shown as a Sneak.

The AI-powered Object Mask moves as the subject moves, maintaining the mask throughout the video. Adobe demoed the feature with a skateboarder, and the tool looks quite impressive.

Other Premiere Pro additions announced today include new Film Impact tools, which are GPU-accelerated presets for transitions and effects.

The Premiere Pro demo also leaned heavily on Adobe Firefly. Adobe showcased taking a video of a skateboarder from Premiere Pro into Firefly to change the ending by adding a new trick. This demo is particularly impressive because AI tends to have the toughest time creating movement that still feels natural.

Firefly’s new ability to generate soundtracks is also a tool that integrates with Premiere Pro, giving video editors the ability to use AI to create a custom soundtrack quickly.

Here’s one for photographers struggling to gain traction on social media. Project Moonlight is an Adobe Sneak for a chatbot designed to help creators on social media.

Project Moonlight integrates directly with Lightroom for importing images into the chatbot. Users can then ask the chatbot to brainstorm post ideas. In the demo, the chatbot came up with three ideas, and the AI was then used to take an idea even further, generating elements like overlays on the image.

Project Moonlight can also connect to Instagram, which helps the chatbot understand how your posts perform and what resonates most with your audience. That also lets the chatbot answer questions about engagement and metrics, and use that data to strategize ideas that stay on brand with previous posts.

Project Moonlight can also be used to apply saved presets to images right in the chatbot. Users can then take the generations into Photoshop or Firefly Boards. Taking the images into Firefly Boards, Adobe demoed applying some of the AI-suggested overlays and ideas.

As a Sneak, Project Moonlight isn't available yet; it's something Adobe has in the works for a future Firefly update.

One last Sneak before the day one keynote ends – Project Graph is a node-based tool for building creative workflows. This tool integrates features from several different apps to create a single workflow in one place.

Users can drag and drop in new reference images to take that image through the same editing workflow again, automating a process that would typically require opening up multiple apps.

In the demo, Adobe showed how users could build workflows spanning multiple apps, including Photoshop and Firefly. The workflow took the reference image through several steps, including removing the background in Photoshop, generating a composite with a Partner Model, and outputting a video.

The workflow’s “capsules” can also be opened in the individual apps themselves, giving users more flexibility and control through tools like Photoshop’s native features.

That’s a wrap on the Adobe Max Keynote for day one. Stay tuned for further updates. On Wednesday, Max includes a second keynote in the morning, but I’m most looking forward to the Sneaks tomorrow night. That’s when Adobe teases the tech that it’s working on behind the scenes that may (or may not) be coming to Adobe software in the future.

Photoshop 2026 has some hidden features.

I sat down with Stephen Nielson, Senior Director of Product Management for Photoshop, to go through some of the newest features in Photoshop – and not all of them were teased during the keynote. Case in point: there's a new Adjustment Layer that works like Lightroom's temp and tint sliders. There's also new integration with Topaz Labs for AI sharpening and denoise, and, no, you don't need a separate Topaz subscription, but it does require generative credits.

Read the full list of features that I'm excited about in Photoshop 2026 here.

The Day 2 keynote is about the creative community.

“Creativity has never been about the tools; it is about the vision that every single day, with every breakthrough, someone like you is daring to say what if,” Lara Balazs, Adobe Global Chief Marketing Officer, said in her opening statement.

Today’s lineup includes Brandon Baum, known for special effects videos, who will create something on stage with Adobe Firefly. He’s followed by NASA-engineer-turned-YouTuber Mark Rober and James Gunn, the award-winning director behind Guardians of the Galaxy and more.

Brandon Baum says that AI has helped drop the barrier to entry in filmmaking and special effects. “[AI is] not some mighty piece that replaces the whole puzzle, but it does help us move through it faster…but more importantly, you can fail faster, so you can stumble upon gold more quickly.”

That’s an interesting take on failing faster, because one of the biggest ways creatives grow is through mistakes. Now, he’s walking through how he created a recent special effects video using Firefly, and creating a new ending live on stage.

Mark Rober is now talking about creating viral videos – and even shared a glimpse of what his Premiere Pro timelines used to look like, and what they look like now. He says he only creates around 10 videos a year, so each one has to have a big impact.

What do all viral videos have in common? You just have to create a visceral response, Rober says. “It means if someone watches this video, it makes you laugh, amazed, inspired…they just need to feel something. If you don’t feel any one of these things, you don’t share the video, you don’t finish watching. That’s the easy part, the harder part is how do you do this?”

You don’t lay out the facts and specs, he said. You need a feeling, a story. While Rober may be talking more about video, I think this also applies to any creative industry, including photography. The best art tells a story, whether that’s video or photo.

Adobe’s Jason Levine is chatting on stage with director James Gunn.

The two started talking about origin stories, and Gunn started in music and went to school for writing prose, not filmmaking. “A phrase that’s overused is follow your dreams,” Gunn said. “I don’t believe in following your dreams because that’s following something that's outside yourself, that’s not the here and now…I loved doing music, but I wasn’t the best at it.”

Gunn says creativity is about being in the moment, whether that’s screenwriting or directing. “We judge what we are doing, and that is the enemy of any creativity. The thing is to create sh*t. Sit down and do something poorly…go through the phase of allowing yourself to turn off your self-judgement, because I think that’s where true creativity lies, then you’re free.”

That’s a wrap on the Day 2 keynote. Later today, Adobe will unveil Sneaks, a deep dive into tech the software giant is working on behind the scenes that may (or may not) make it into future software updates. Sneaks kicks off at 5:30 PM PDT / 8:30 PM EDT / 12:30 AM GMT and will be co-hosted by Jessica Williams.

Adobe Max Sneaks 2025 are about to start

Head of Adobe Research Gavin Miller called Sneaks "the Olympics of demos." Miller indicated tonight's Sneaks have three themes: reimagining images, revolutionizing video and motion, and transforming sound and storytelling.

When I chatted with Miller earlier today, I got some interesting insight into where Sneaks come from. Submissions are welcomed from everyone at Adobe, not just the research team, and there are multiple rounds before the finalists are chosen.

Sneaks are also often presented by the researchers and coders themselves. How the community – online and in person – responds can influence which Sneaks ship in actual products first.

Let's see what's in store next for Adobe at the 2025 Sneaks.

Project Clean Take is a video production tool that lets users edit and regenerate sound, including separating sounds from complex tracks. First, the demo showed highlighting text in the transcript and regenerating it with AI, from changing the inflection of a word to changing the words themselves.

Then Lee Brimelow showed an example of a bell sounding over the dialogue in a video. Project Clean Take can split the audio into separate components directly in the timeline, separating speech, music, sound effects, and reverb. Users can then mute one of those tracks, which in this case removes the sound of the bell.

Another use: recording in a public location with music playing in the background that you don’t have a license for. Project Clean Take has a Find Similar option, which finds a similar track on Adobe Stock that can be licensed. A Match Acoustics button then helps the new soundtrack match the acoustics of the original video.

Back in the transcript, Brimelow demoed highlighting the entire transcript and changing the emotion of the speaker, or even changing the voice to a whisper.

Project Surface Swap is a Photoshop tool that not only selects surfaces but also understands them as surfaces, accounting for factors like reflections. With one click, Valentin Deschaintre selected a car in an image, then used Photoshop’s adjustment layers to change its color.

But the name is Surface SWAP, and that’s part of the tool as well. Once the surface is selected, users can choose a different texture and, as the name implies, swap out the surface, changing a wood table to marble.

Finally, Deschaintre demonstrated using the tool to place a logo onto a textured wall in a photograph. But the wall had vines on it, so Project Surface Swap helped mask out the vines so the logo appeared on the wall realistically. With everything masked, you can even add a drop shadow to the vines.

Project New Depths is a tool for creating composites using a mix of 3D and photo editing tools. As Elie Michel added a tree to a photograph and moved it around, the tree layered correctly with the objects already in the photo, sitting behind a tractor and foliage.

But that’s when the demo got really interesting. Project New Depths was even able to rotate and change the angle of the photo, viewing the tractor from a different angle. That's because the background image is a series of photos taken at different angles and stitched together.

There’s also a lasso tool to create selections and make adjustments.

In another photo, Adobe demoed selecting part of a fountain and rotating it around on the base.

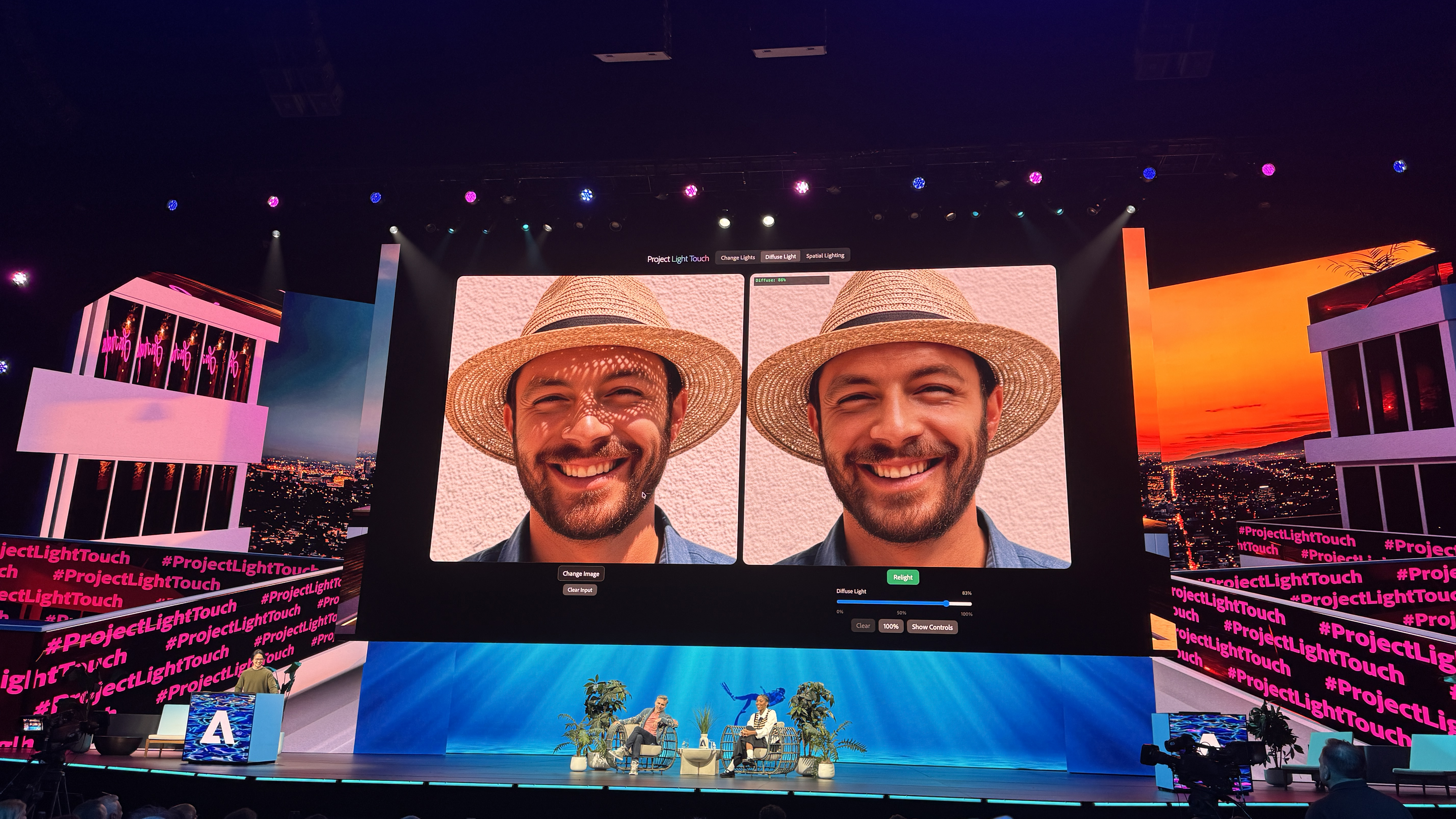

Project Light Touch is a tool for relighting photographs. In the first demo, Zhixin Shu showed a photo of a lamp, then used the tool to turn the light on, which affected the shadows and highlights in the surrounding room. But that's not the only way the relighting tool can be used.

In the second demo, Adobe showed a portrait with light filtering through a hat. Using Project Light Touch, the software relit the subject’s face, removing the shadows so the face appeared evenly lit.

In Spatial Lighting mode, users can add a virtual light in 3D and move it around, then upload an image and relight it based on the 3D model. Users can go back to the 3D model and move the light around to change the effect, including putting the light inside a jack-o’-lantern. Jessica Williams even took over to try, moving the light around the 3D model and watching how the image changed as a result.

Finally, Adobe demoed changing the color of the light using Point Controls, lighting up a witch’s cauldron with green light.

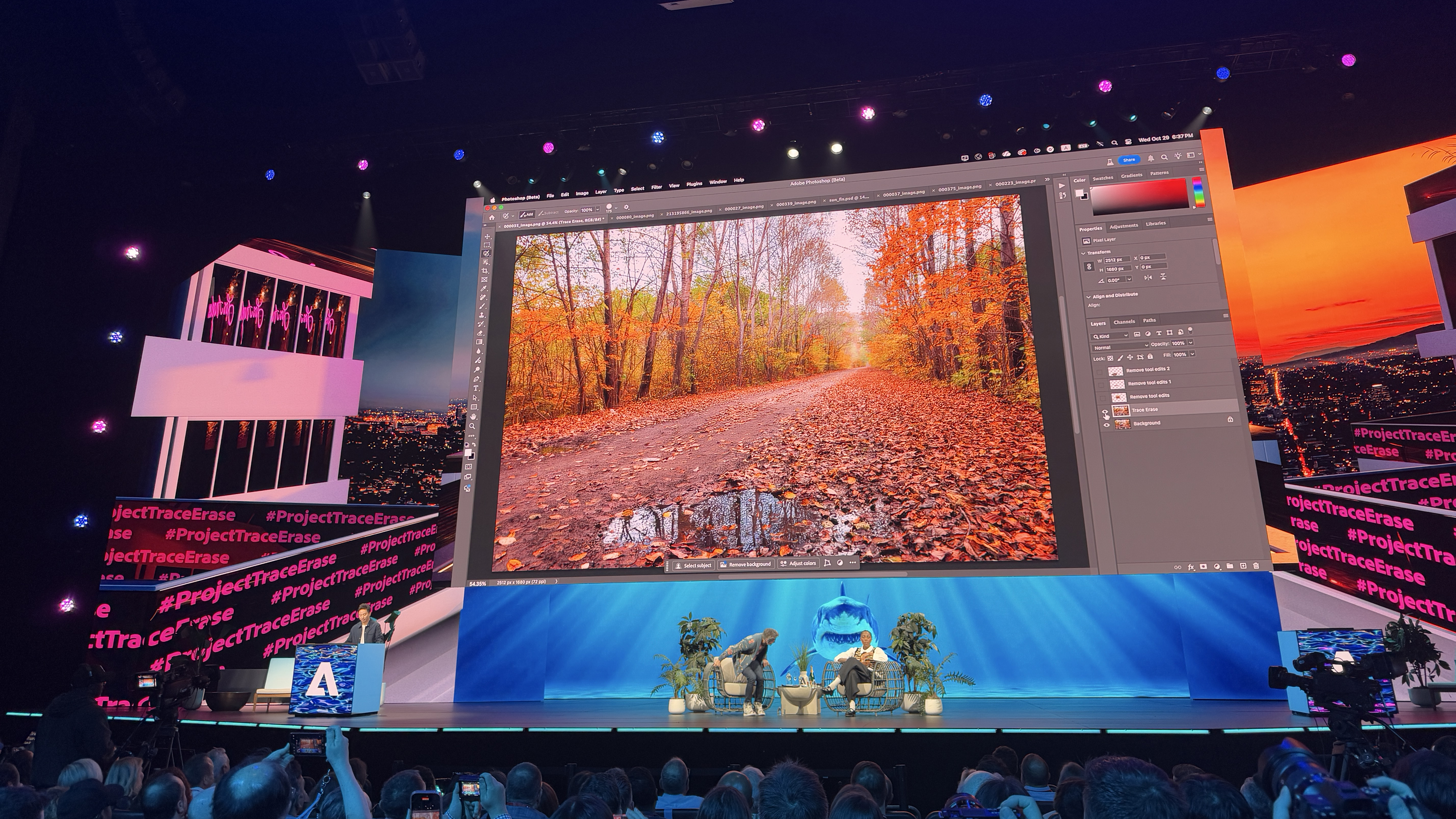

Project Trace Erase is the next generation of generative AI removal tools. While Photoshop already has generative remove tools, Trace Erase not only removed a vehicle from a photograph but also removed its shadow and reflection. Current tools would remove the puddle along with the reflection, but in the demo the puddle stayed intact.

Next, Adobe showed that the tool works even when the subject isn’t fully selected, still removing a person, their shadow, and their reflection.

That’s not all, though: Trace Erase also removed smoke from an image, then removed a lamp along with the light it emitted.

In a photo with lens flare, Trace Erase was able to remove the entire sun flare, including the ghosting spots.

Adobe then leveled up with a photo of a snowy landscape, removing not only the person but also the person’s footprints. The audience got real loud when the next photo was of a jet ski: Project Trace Erase removed not just the jet ski but also the wake behind it.

Project Frame Forward is a tool that makes video editing more like photo editing. It uses generative AI to take an edit made to the first frame of a video and apply it to the entire clip. After Trace Erase from the earlier demo was used to remove a person from the first frame in Photoshop, Frame Forward analyzed the edited photo and removed the person from the entire length of the video.

The tool doesn’t use masking or tracking, which Adobe demonstrated by taking a moving race car out of a video with both the car and the camera moving.

Frame Forward isn’t just for removing objects. It can also add objects: you edit the first frame like an image, then let the AI apply those edits to the rest of the clip. Impressively, Adobe included a puddle with reflections in this demo.

In the last example, Adobe showed a wedding video where a guest wanders into the background and both the bride’s dress and the sky are overexposed. Again, the first frame was edited as an image in Photoshop, adjusting the light, removing the distractions, and adding a sunset to the overexposed sky. Back in Frame Forward, feeding in the edited image finished the job, applying those changes to the entire video clip.

Project TurnStyle is another tool that may change how photo editors build composites inside Photoshop. A 3D rotate button on the Contextual Task Bar lets users rotate objects placed into the photo using AI. The tool also includes an upresolution option to enhance detail.

Using Photoshop’s new Harmonize button, Adobe demonstrated blending that newly rotated object with the background.

Finally, Adobe demonstrated adding people to the photo, and using TurnStyle to change the angle of how they are standing. As the people turned, the faces looked a bit like wonky AI faces with mushed details, but after using the upresolution button, those AI oddities on the faces disappeared.

That's a wrap on Sneaks for 2025!
