Are Samsung phones faking the images they capture of the moon?

Samsung's Space Zoom camera feature seems to be working rather too well. We smell a rat...


It seems some Samsung phones can take photos of the moon that push the boundaries of what many would consider 'real'. An argument could even be made that moon shots taken using Samsung's 'Space Zoom' function are so overprocessed that they're simply fake. But that implies a degree of malevolence, whereas the issue here is more about overzealous computational image processing, as well as Samsung's vague explanation of how Space Zoom works.

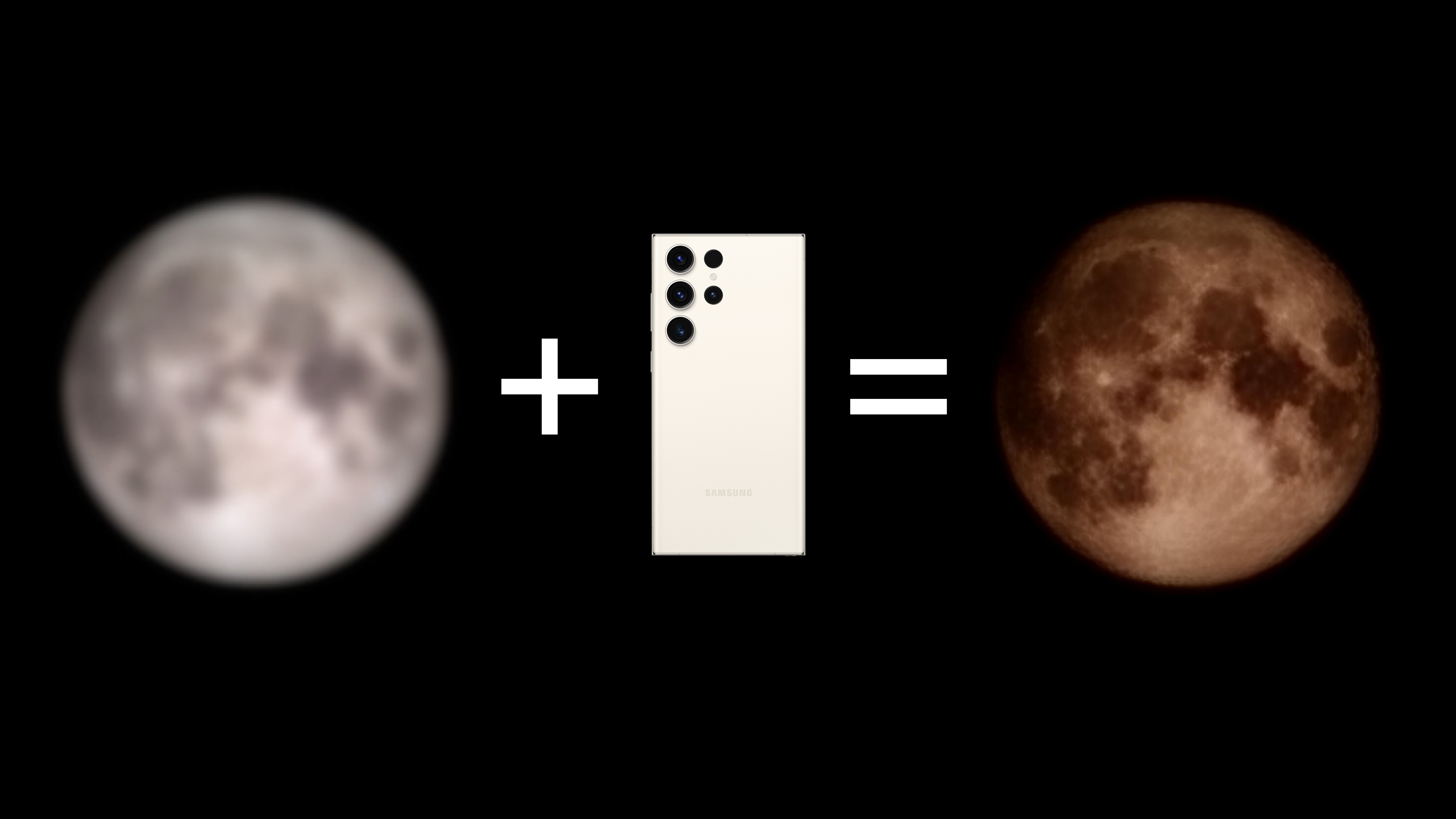

The story has come to light thanks to Reddit user u/ibreakphotos. To test the power of the Space Zoom feature in some Samsung phones, they created a deliberately blurry image of the moon, displayed it on a computer monitor, and then photographed that image using a Galaxy S23 Ultra.

And that's when the fun begins, as the S23 proceeds to turn the blurry original image into an impressively detailed lunar depiction that's almost a world apart from the blurry mess of the starting shot.

Here's the Space Zoom process in action:

And here's the end result, after the S23 Ultra has done its thing:

Quite the transformation!

It's no secret that camera phone image processing is exceptionally advanced - it makes the processing in conventional standalone cameras look positively prehistoric by comparison. But what we have here goes further than what many people would define as just 'processing' - basic sharpening and a contrast boost, etc. The image generated by the S23 Ultra clearly has detail where no such image information existed in the original shot. The phone seems to essentially be using the blurry image as a basic reference for light and dark regions, and is then adding its own detail 'layer' which simply didn't exist in the blurry first image.
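To make that idea concrete, here's a minimal sketch of what 'using the blurry image as a reference and adding a detail layer' could look like. It is purely illustrative and is not Samsung's method: the function names, the one-dimensional 'scanlines' and the stored `reference` detail are all assumptions made up for this example.

```python
def block_means(pixels, size):
    """Average brightness of each run of `size` neighbouring pixels."""
    return [sum(pixels[i:i + size]) / size for i in range(0, len(pixels), size)]


def add_detail_layer(captured, reference, size):
    """Keep the coarse brightness of `captured`, borrow fine detail from `reference`.

    Hypothetical illustration only: `reference` stands in for detail that
    does not come from the captured frame.
    """
    cap_means = block_means(captured, size)
    ref_means = block_means(reference, size)
    out = []
    for i, ref_pixel in enumerate(reference):
        block = i // size
        # Fine detail = how far the reference pixel sits from its block average.
        detail = ref_pixel - ref_means[block]
        out.append(cap_means[block] + detail)
    return out


# A blurry capture: two flat regions, one darker and one lighter.
captured = [90, 90, 90, 90, 150, 150, 150, 150]
# Made-up fine detail that was never in the capture.
reference = [60, 140, 60, 140, 100, 180, 100, 180]

result = add_detail_layer(captured, reference, 4)
print(result)
print(block_means(result, 4), block_means(captured, 4))
```

The output keeps the same light and dark regions as the capture, yet every bit of fine texture in it comes from somewhere other than the camera.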


Some have speculated the phone is applying its own pre-set textures onto the original image, then blending them to fit with the light and dark areas the camera actually captures. Such a technique makes sense given the results above. However, in a 2021 article by Input Mag, Samsung told the publication that “no image overlaying or texture effects are applied when taking a photo”. Instead, AI is used to detect the Moon’s presence and then “offers a detail enhancing function by reducing blurs and noises.” In a further clarification, Samsung stated it uses a “detail improvement engine function” to “effectively remove noise and maximize the details of the moon to complete a bright and clear picture of the moon”. That's a rather vague explanation at best, and one which doesn't actually explain what's going on here.

Sure, image enhancement/sharpening/upscaling algorithms are now prevalent in phones and image editing software, but it seems the Space Zoom feature is doing more than that. After all, you can't extract detail where there is no detail in the first place. This isn't Hollywood, or even a raw image containing highlight or shadow detail that simply has to be recovered so it becomes visible to the naked eye. In the case of the moon shot, we just have a very low resolution starting point with nothing left to recover or extract.
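A quick toy example shows why. In the sketch below (the pixel values and the `downsample` helper are invented for illustration), two different strips of 'surface' are reduced to the same low-resolution result, so nothing working from the low-resolution version alone can tell which one it started from.

```python
def downsample(pixels, factor):
    """Average each run of `factor` neighbouring pixels into one value."""
    return [
        sum(pixels[i:i + factor]) / factor
        for i in range(0, len(pixels), factor)
    ]


# Two hypothetical 8-pixel scanlines (brightness 0-255) with different fine detail.
scanline_a = [40, 200, 40, 200, 120, 120, 120, 120]
scanline_b = [120, 120, 120, 120, 40, 200, 40, 200]

low_a = downsample(scanline_a, 4)
low_b = downsample(scanline_b, 4)

print(low_a)  # [120.0, 120.0]
print(low_b)  # [120.0, 120.0]
print(low_a == low_b)  # True: the fine detail that told them apart is gone
```

Any detail that appears after this point has to come from somewhere other than the captured data, whether that's a trained model's expectations of what the moon looks like or something else.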

You can see why Samsung might want to play down more extreme forms of computational image processing. The increasing prevalence of AI-generated images is inevitably attracting attention - positive, sceptical, and negative - and is a controversial topic. But should it be? Even in the days of film photography, dodging and burning areas of a developing print changed its appearance from how it was originally captured, and since the advent of digital image manipulation, it's been possible for an edited image to bear little resemblance to the shot first recorded by the camera.

We reckon the issue here is more about transparency. It's common - and largely accepted - knowledge that Photoshop (and its alternatives) can turn an image into something almost entirely different with enough skilled user input, whereas Space Zoom is marketed as just another smartphone camera feature which simply lets you take attractive shots of the moon. The result is an image which a user could well assume is a reasonably faithful output of what the camera actually captured, maybe with just a little extra sharpening and color/contrast adjustment to give the shot some extra punch. And yet Space Zoom, if u/ibreakphotos's tests are accurate, is clearly doing more than that, to the point that the end image appears more manufactured than captured. All the while, the end user is under the impression that the opposite is true, thanks to Samsung's vague explanation of what's going on behind the scenes.

Is this the tip of the AI fakery iceberg, or just a benign example of clever smartphone processing continuing to give us better photos? You decide.

Story credit: u/ibreakphotos, via The Verge

Ben is the Imaging Labs manager, responsible for all the testing on Digital Camera World and across the entire photography portfolio at Future. Whether he's in the lab testing the sharpness of new lenses, the resolution of the latest image sensors, the zoom range of monster bridge cameras or even the latest camera phones, Ben is our go-to guy for technical insight. He's also the team's man-at-arms when it comes to camera bags, filters, memory cards, and all manner of camera accessories – his lab is a bit like the Batcave of photography! With years of experience trialling and testing kit, he's a human encyclopedia of benchmarks when it comes to recommending the best buys.