AI image generation is one of the most dramatic developments the creative fields have seen for some time, and 2022 was the year that it truly exploded. In the space of a few months, a new technology seemed to appear out of nowhere and evolve at lightning speed, allowing anyone to create an image of practically anything they want merely by typing a description.

Generative AI has provoked a lot of controversy along the way, and exactly where it will lead us remains in doubt, but it seems inevitable that the impact of AI image generators is going to be felt on photography as well as on digital art and illustration. As we reach the end of a year that saw the technology go from a niche curiosity to an easily accessible tool, we round up the milestones that marked the way and that will make us remember 2022 as the year that AI changed photography.

AI image generation takes a huge leap forward

The use of artificial intelligence to create images isn't new. Harold Cohen's AARON has been around since the 1970s. But things have accelerated massively over the past decade with technology like generative adversarial networks (GANs) and Google's DeepDream. This year saw an explosion of a new generation of text-based diffusion models based on massive datasets. They're easier to use, more faithful to the data, and they're capable of turning out stunningly accurate results.

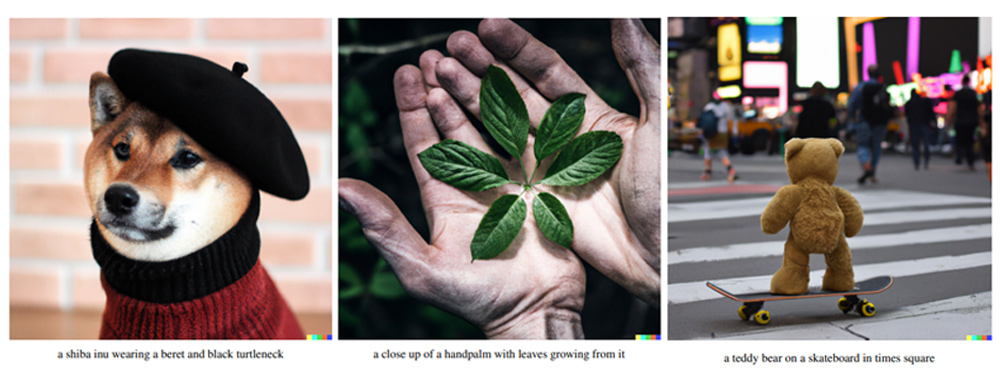

In April, OpenAI launched DALL-E 2. A portmanteau of 'Dali' (as in Salvador) and Pixar's 'WALL-E', it offered a massive upgrade on its predecessor, with more realistic results and four times the resolution. Although there was initially a waiting list, within a couple of months a million people had access and the internet was flooded with surreal, phenomenally detailed images of winged astronauts, urban penguins, muppet fashion shows and Rubik's cubes made from sushi that could easily pass as photographs. People's minds were blown.

• See our guide to the Best AI image generators

AI image generation for all

A lot of AI image generators are being tested in very closed trials, but in August came a release that would massively expand access to the tech. Stability AI's Stable Diffusion is an open-source tool, with publicly available code and model weights. Anyone with the technical knowhow could run it on a consumer PC with a fairly pedestrian GPU. No more waiting to get access to a cloud-based service.

Developers began using Stable Diffusion for their own apps or plugins, often providing further training to the model (as we'll see later). People have also created plugins for Photoshop and GIMP, and the graphic design platform Canva has officially incorporated Stable Diffusion to allow text-to-image generation. Along with expanded access came huge controversy. Stable Diffusion's open policy was criticised for permitting potentially nefarious use, from the copying of artists' styles to creating non-consensual porn or abusive images. But it's impossible to deny Stable Diffusion's contribution to the mainstream expansion of AI imagery.

Photographers start to experiment

A post shared by Alper Yesiltas (@alperyesiltas)

The immediate possibilities for AI image generation were perhaps more obvious for illustration, but photographers at the boundary between traditional photography and digital art were quick to start exploring its possibilities. With illustration, anything is already possible, in theory. Photography is more constrained by reality, but it's now fairly easy to create images that look like photographs but show entirely unreal and even impossible things.

The ability to create and modify the likenesses of famous people is a controversial aspect of generative AI, but many found the Turkish photographer Alper Yeşiltaş's 'As If Nothing Happened' to be a touching tribute to late celebrities. He used AI image generation to try to create lifelike images of how celebrities might look today had they not died so young.

A post shared by Mathieu Stern (@mathieustern)

The French photographer Mathieu Stern found that AI could also create stunning product photography of cameras that he could only dream of. He used DALL-E 2 to generate stunningly real-looking images of imaginary cameras inspired by everything from Star Wars characters to Qing dynasty porcelain.

Re-imagining my own images with Artificial intelligence https://t.co/1Efn9Ltgc4 #ai #aiartist #midjourney pic.twitter.com/5YcJNh6qTN November 9, 2022

And it didn't take long for photographers to start testing how AI-generated creations would compare with their own work. The photographer Antti Karppinen uploaded some of his photos to Midjourney, typed in text prompts describing them and shared the astounding results. "This industry is changing many things, and also disrupting industries. How we create images is not the same anymore," he says on his blog.

Big players show their hands

We’re pleased to introduce Make-A-Video, our latest in #GenerativeAI research! With just a few words, this state-of-the-art AI system generates high-quality videos from text prompts. Have an idea you want to see? Reply w/ your prompt using #MetaAI and we’ll share more results. pic.twitter.com/q8zjiwLBjb September 29, 2022

The main players in AI image generation at the moment might seem like new names (although some have big backers; Elon Musk and Microsoft are investors in OpenAI). But better-known tech giants are developing tools that might be even more powerful.

Google's Imagen is being talked about as the most powerful AI image generator currently in existence. The company's just not letting anyone use it. Google says it's keeping Imagen under wraps until it can find a responsible way to unleash it on the world. So far, its AI Test Kitchen app is offering only City Dreamer, which can create SimCity-like isometric designs, and Wobbler, which lets users create and dress little monsters.

Microsoft has plans to add an AI image generator to Bing, which would let people generate images without having to go to a dedicated app. And Meta is developing Make-A-Video, which brings the same kind of text-prompt wizardry we've seen with stills to moving images.

DALL-E 2 opens access to all

Nice man Dall-e is allowing faces again. He is me as a wwe wrestler taking a bath pic.twitter.com/bwoCHIDylF September 19, 2022

For five months, there was a waiting list for DALL-E 2. But at the end of September, OpenAI opened the gates to allow anyone to use the beta. It said it had used the time to develop safeguards by "learning from real-world use" so it could open access while preventing abuse.

It introduced a bunch of new tools too, including outpainting, which lets users expand an image beyond its original borders. Cropped a photo too far and lost the original? Didn't have a wide enough lens? With outpainting, you can use AI to generate a wider composition outside the original frame. OpenAI also removed a ban on editing faces, allowing anyone to use its image-to-image tool to upload a photo of a human and generate variations.

Stock libraries take stances

Anyone concerned about the rise of AI imagery may have taken comfort in September when several sites banned AI-generated images. The popular portfolio and networking website PurplePort prohibited "100% machine-generated images" in order to maintain its focus on human-focused art. Owner Russ Freeman said he saw AI-generated images as deceitful and trivial.

Soon afterwards, none other than the stock giant Getty Images followed suit, in this case due to copyright concerns. It cited "open questions with respect to the copyright of outputs from these models" and "unaddressed rights issues with respect to the underlying imagery and metadata" used to train them.

It seemed the fight back against the machines had begun. But just one month later Shutterstock, which claims to be the world's largest stock image site, did what some saw as a deal with the devil. It announced a collaboration with OpenAI to sell images created using DALL-E 2. And more than that, it plans to provide access to DALL-E 2 directly on its own website. It says photographers whose work was used to develop the technology will be compensated through a Contributor Fund and that photographers will receive royalties when the AI uses their work.

A few weeks later, Adobe announced guidelines for the Adobe Stock marketplace. It's allowing AI-generated images to be submitted as long as they're labeled as such and aren't generated using prompts that refer to people, places, property or an artist's style without authorization. These are big moves that could eventually have an impact on photographers who make money from selling stock imagery.

Mobile apps take AI image generation mainstream

A post shared by Megan Fox (@meganfox)

As easy to use as they are, AI image generators are still something of a niche interest among developers, creatives and those sufficiently curious to seek them out. But it didn't take long for mobile apps with mass appeal to appear or to add AI features to their existing tools.

MyHeritage's AI Time Machine and Lensa's Magic Avatars both use adaptations of Stable Diffusion and teach the AI to recognise the faces in images that users upload. The former can turn selfies into impressively realistic fantasy historical portraits. The latter can turn selfies into portraits in a range of styles, often, it seems, with little in the way of clothing if you're female.

What next for generative AI?

Make any idea real. Just write it. Text to video, coming soon to Runway. Sign up for early access: https://t.co/ekldoIshdw pic.twitter.com/DCwXcmRcuK September 9, 2022

Generative AI has been described as a Cambrian explosion of capability, and what we’ve seen so far is almost certainly just the beginning. The advances go beyond imagery and cover everything from scientific research to programming – yes, AI writing more AI.

In imagery, text-based generative AI is very close to mastering still images. Now, video is coming. Meta, the owner of Facebook, is working on its Make-A-Video generator, Google AI is working with video in its unreleased Imagen and RunwayML is adding generative AI to its video editor.

We're likely to see more AI image generators specialising in particular types of images as developers add modified versions of the likes of Stable Diffusion and DALL-E 2 to their own tools with extra training using specific datasets. And generative AI is likely to creep into more mainstream tools.

Adobe’s Project All of Me, revealed at this year’s Adobe MAX, looks set to introduce features not unlike DALL-E 2's inpainting and outpainting to Photoshop, 'un-cropping' photos and allowing users to do things like switch a subject’s clothing. Photoshop already has a prompt-based Backdrop Creator in beta. From here, it's not hard to imagine Adobe adding text-to-image generation directly to its editing programs, which some people are already doing using plugins.

Ethical and legal questions will remain. Legislation will need to catch up to provide definitions on the issues of rights and ownership. And it may become more difficult to vouch for the authenticity of photography as it becomes impossible to differentiate an AI-generated image from a photo taken with a camera. At least until we have some kind of app that can tell us how an image was created.

AI is changing photography as the line between reality and fiction becomes more blurred, but it isn't going to kill the camera yet. People are still going to want genuine images of people, places, products, events and more, and AI can't create an image of anything that hasn't already been depicted in photography or illustration. AI-generated images could start to replace photographs in very specific areas, like lifestyle stock imagery. And since the technology can be used to transform photos as well as to create images from scratch, it might start to become part of photography workflows for more professionals.

Joe is a regular freelance journalist and editor at our sister publication Creative Bloq, where he writes news, features and buying guides on a range of subjects including AI image generation, logo design and new technology. A writer and translator, he worked as a project manager at Buenos Aires-based design and branding agency Hermana Creatives, where he oversaw photography and video production projects in the hospitality sector. When he isn't exploring new developments in AI image creation, he's out snapping wildlife and landscapes with a Canon EOS R5 or dancing tango.