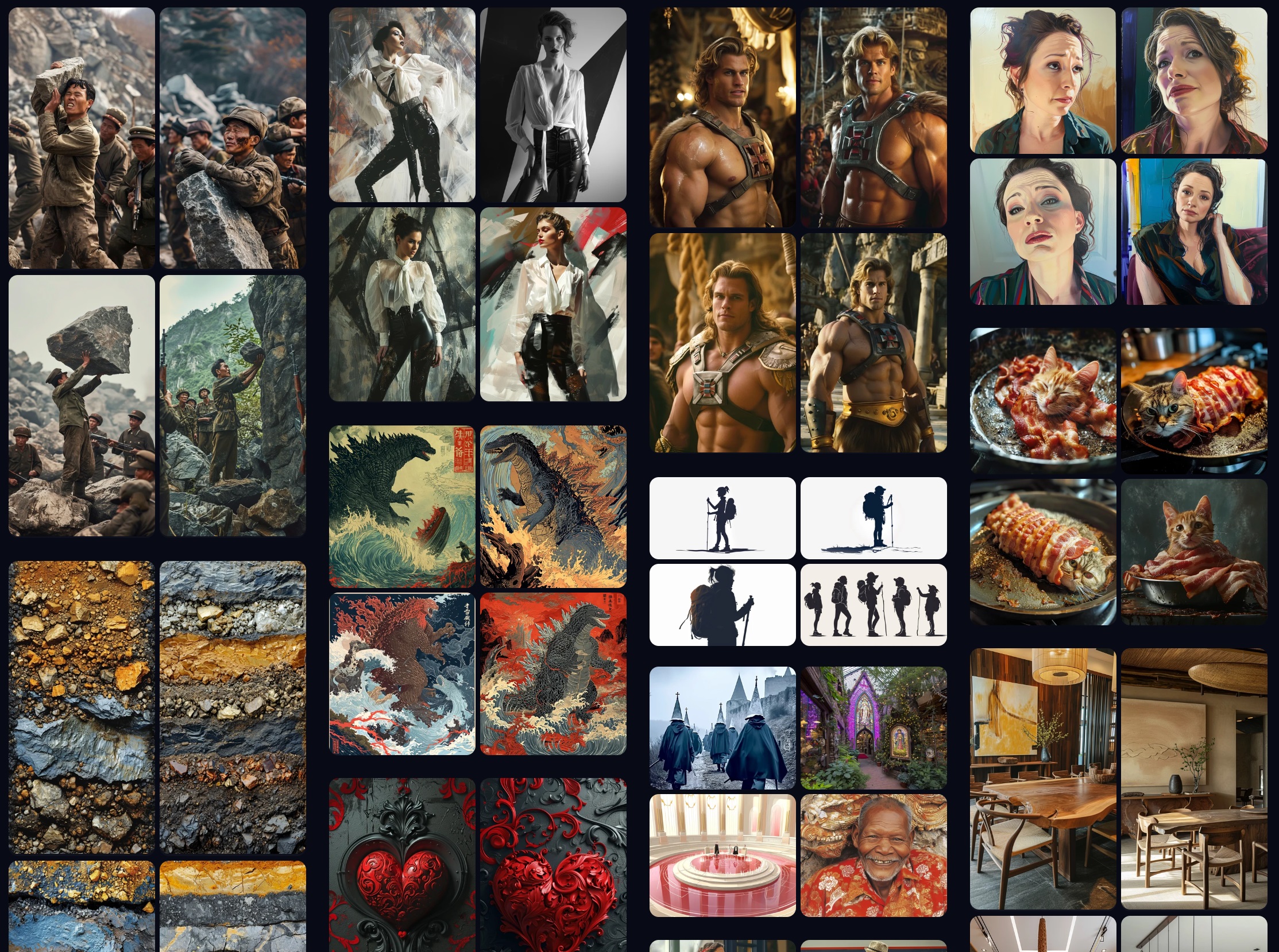

The best AI image generators

The best AI image generators for photo-editing, digital art and more.

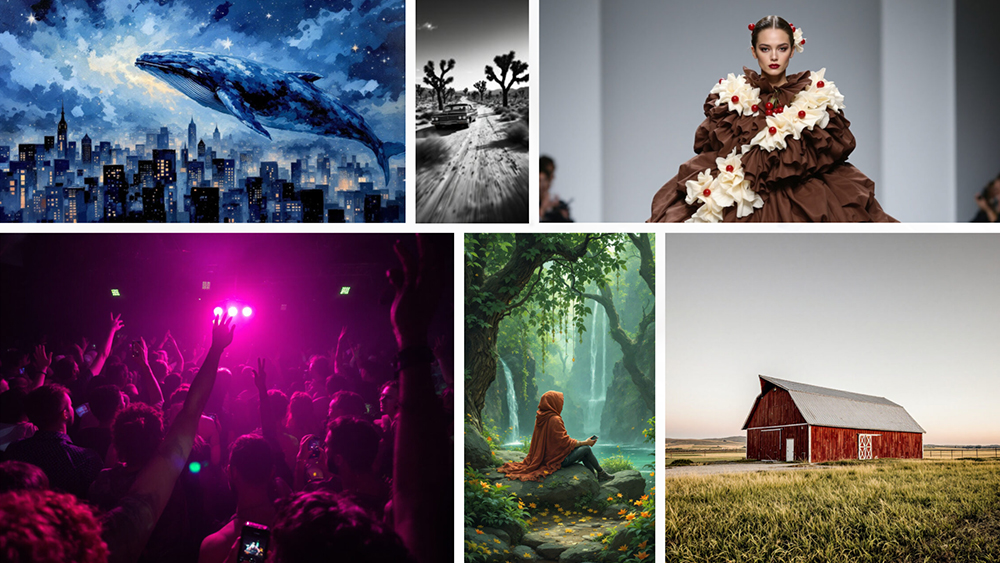

The best AI image generators can turn text descriptions into stunning photorealistic imagery or images in a wide range of artistic styles. Many can also be used to create backgrounds or other elements for photo editing and compositing.

Generative AI remains controversial because of unresolved copyright issues and the fear that it could put some creatives out of work, but it has made its way into many of the most popular photo editing programs, and AI-generated content is now everywhere. It's getting to the point where most people working in the visual arts, including photographers, need to at least know about the tools.

Most of the best AI image generators work in a similar way, but some are more reliable, produce more realistic results, provide more tools or have more intuitive interfaces. I've tested and reviewed the most popular options to pick out the best AI generators for different needs, including for working photographers. Below, I compare the pros and cons of each and suggest who they're most suited for. See the questions section at the bottom to learn more about how AI image generators work.

The Quick List

Photoshop isn't the best option for fully blown image generation, but we think the text-to-image tools in this industry-standard image editor, such as Generative Fill and Generative Expand, are the most practical and useful for photographers who want to use generative AI in their workflow for editing or compositing.

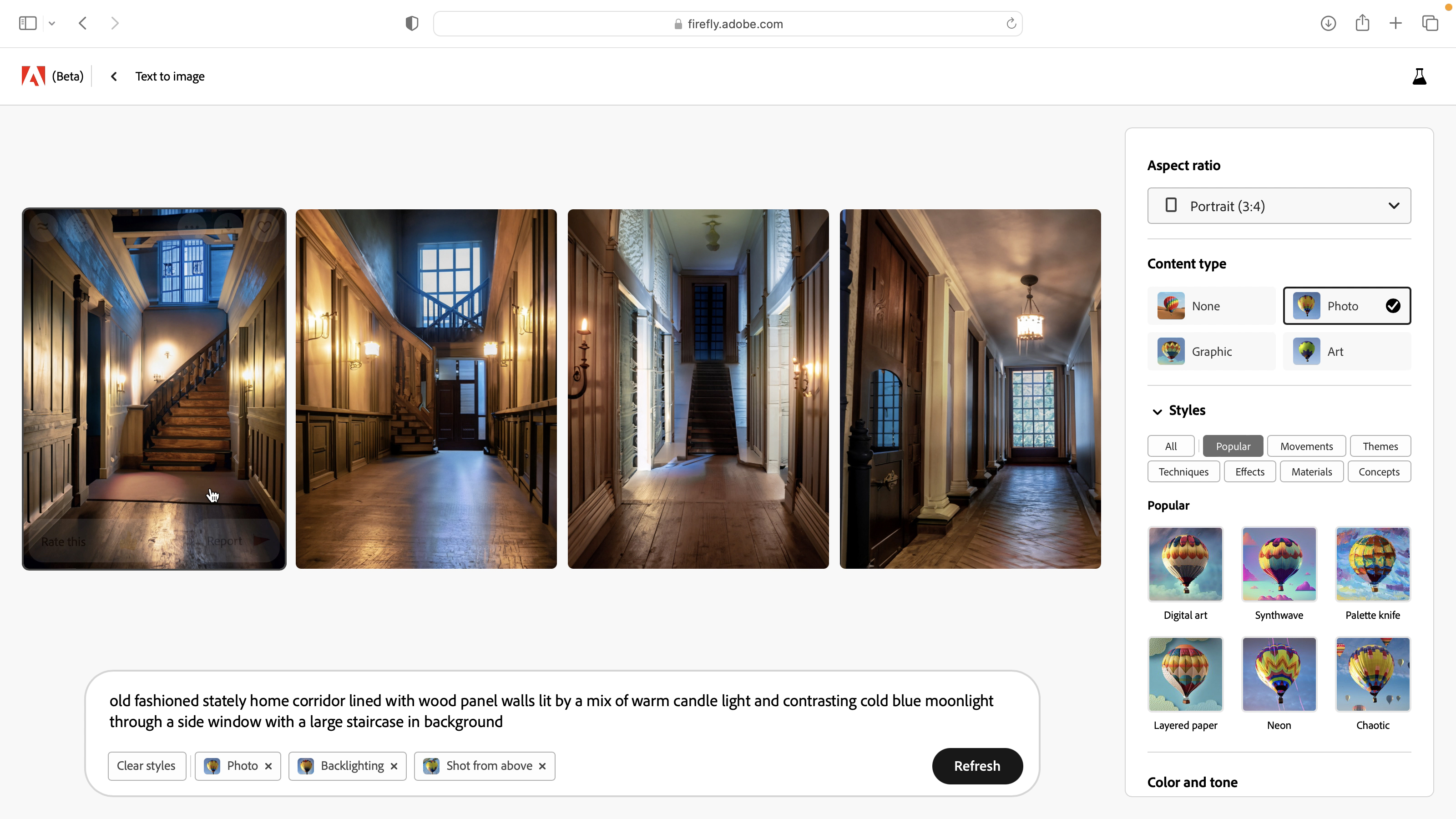

We think Firefly is the best AI image generator for broader design work. As well as the image generator, there's a vector generator and recolor tool, a text effects generator and more. It's trained on licensed and public-domain images, making it less controversial. The catch is that the tools are spread over various Adobe apps.

To generate images from scratch using AI, Midjourney continues to lead the pack in terms of photorealism and variety of styles. Its latest iteration, Midjourney V6.1, provides stunning detail, convincing lighting and reliable prompt interpretation, and it now has a dedicated website.

AI image generators can feel unfamiliar, but DALL-E 3 is super easy to use since it's integrated with ChatGPT. That makes interacting with it more like having a conversation, and ChatGPT can help you refine your prompts.

Flux Dev and Schnell are open-source AI image generators that are available to use for free, either locally or via various websites. If you have a computer powerful enough to run them locally, you get the benefits of privacy, control and the ability to use fine-tuning scripts.

AI video generation still isn't as advanced as image generation, but it's developing fast. Adobe's starting to roll out tools, but for now, we think Runway AI is the most complete option with public access. Its motion brush tool can be used to animate still images.

The best AI image generators in full

The best AI image generator for photographers

1. Photoshop

You might be surprised to find a photography and digital art stalwart at the top of our list of the best AI image generators, but hear me out. If you're a photographer looking to use generative AI as part of your editing or compositing workflow, I think Photoshop is the most practical and useful tool to use. For a start, you may already have it or be considering it for other parts of your workflow, whether it's for compositing, retouching or using adjustment layers.

Photoshop's generative AI capabilities are powered by Adobe Firefly (see below). They include Generative Fill, which allows you to select an area of an image and replace it with something else by writing a text prompt, and Generative Expand, which allows you to expand a photo beyond its borders – very handy for those occasions when you wish you'd taken a slightly wider shot. There's also now Generate Image for fully fledged AI-image generation.

I find that the latter tool can't compare with the likes of Midjourney for generating realistic imagery from scratch, but Photoshop's other AI image generation tools are fantastically practical. Generative Fill can be used for removing unwanted objects in a way that's much cleaner and more powerful than the legacy tools like the Clone Stamp and Content-Aware Fill.

But it can do much more besides, from retouching to stylising an image, changing backgrounds and adding completely new elements for those leaning more towards the digital art side of photography. It can potentially save hours of work for those who make digital collages or composite images. And best of all, this is Photoshop, so you have dozens and dozens of other useful tools for image editing.

Photoshop subscribers automatically get access to updates, and we expect Adobe will be introducing more generative AI tools in the future. Of course, that monthly subscription is also the main downside to Photoshop. Using the generative AI features also costs what Adobe calls 'generative credits'. You get a certain number of these included every month with your subscription, and you can buy more. You can still use the tools when your credits run out, but generations will run more slowly.

Read our full Photoshop review for more details.

The best AI image generator for design

2. Adobe Firefly

Photoshop's generative AI tools are powered by Firefly, and if you take out a Creative Cloud All-Apps subscription, you get full access to a range of Firefly-powered tools. Firefly is actually free for all to try, although non-subscribers are limited to 25 free generative credits a month, enough for just 25 generations.

Firefly has a fully fledged image generator, but also has a wide range of other generators that can be used to recolour vectors, create text effects or convert 3D images to 2D, and more are in development. In my tests, I've found the results of the image generator to be more limited than Midjourney's, perhaps because it has been trained on fewer images (and only on licensed images), and photo styles tend to look more cartoonlike. However, image quality and reliability have improved massively with the update to a new model in mid-2023.

As for that training, a big selling point of Firefly is Adobe's claim that it's a more 'ethical' AI image generator. It was trained on licensed images from the Adobe Stock library, and content in the public domain. This has helped Adobe avoid some of the controversy (and legal battles) related to AI image generators. Adobe even promises to indemnify Enterprise users if they get sued for using it.

Another part of Firefly's special sauce is that it integrates nicely with Creative Cloud tools such as Photoshop, Adobe Express and Adobe Illustrator. There's also now Firefly-powered Generative Extend for video in Premiere Pro. That said, you'll need a Creative Cloud subscription to take advantage of all of these tools. For more details, see our article on How to make Firefly work for you.

The best AI image generator for photorealism

3. Midjourney

Midjourney has established itself as one of the most popular AI image generators thanks to its ability to produce a wide range of styles of imagery, and, in particular, very realistic-looking photographic styles. That viral image of the pope in a puffer coat? That was Midjourney.

The latest model, Midjourney V6.1, is even more powerful, capable of stunning detail in skin and hair, more reliable interpretation of prompts and much, much better handling of text in images. It's now possible to put phrases of text in images by including them in the prompt, although, for longer phrases, I found the results still aren't as reliable as Ideogram (see below).

I've found the generation of human figures to be much more accurate now, at least for the main subject of images (so no more jokes about six fingers on each hand). I've also been impressed by the consistency of characters generated across multiple images when using the 'cref' (character reference) tag. It's been intentionally designed not to work for images of real people, but it works well when used with an image generated by Midjourney featuring a single character, and makes it much more viable to use the generator for things like storyboarding since characters look similar across shots.

You can also upload your own images to use as references for compositions, but Midjourney doesn't provide anything like the precise AI-driven editing tools that you find in Photoshop – for example, you can't upload an image of yourself and change the background as you could with Photoshop's Generative Fill. That makes it less viable as a standalone app, since whatever you generate, you're likely to want to make further edits in a more traditional piece of software.

Still, Midjourney provides the most control that I've found for generating new images, allowing a wide range of parameters to be specified through a range of prompt codes. On the flip side, that can make it more daunting and more difficult to learn than a tool like DALL-E 3 (below), since there are various commands and syntax to learn to craft successful prompts.
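To illustrate how those prompt codes fit together, here's a minimal sketch in Python that appends parameters to a text description. The helper `build_midjourney_prompt` is hypothetical, and while `--ar` (aspect ratio), `--v` (model version), `--stylize`, `--no` (things to exclude), `--seed` and `--cref` are parameters Midjourney has documented, treat the exact syntax as an assumption and check its current documentation before relying on it.

```python
def build_midjourney_prompt(description, aspect_ratio=None, version=None,
                            stylize=None, exclude=None, seed=None, cref=None):
    """Hypothetical helper: append Midjourney-style parameters to a prompt.

    The parameter names mirror Midjourney's documented prompt codes, but the
    exact syntax may change between model versions.
    """
    parts = [description.strip()]
    if aspect_ratio:
        parts.append(f"--ar {aspect_ratio}")        # e.g. "3:2" for a 35mm frame
    if version:
        parts.append(f"--v {version}")              # model version, e.g. "6.1"
    if stylize is not None:
        parts.append(f"--stylize {stylize}")        # strength of Midjourney's own aesthetic
    if exclude:
        parts.append("--no " + ", ".join(exclude))  # things to keep out of the image
    if seed is not None:
        parts.append(f"--seed {seed}")              # fixes the starting noise
    if cref:
        parts.append(f"--cref {cref}")              # URL of a character reference image
    return " ".join(parts)


prompt = build_midjourney_prompt(
    "portrait of a fisherman at dawn, 85mm lens, shallow depth of field",
    aspect_ratio="3:2",
    version="6.1",
    stylize=100,
    exclude=["text", "watermark"],
    seed=42,
)
print(prompt)
```

The resulting string is simply what you would type into Midjourney's prompt box; building it this way just makes it easier to keep track of which parameters you changed between generations.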

Using Midjourney on the social platform Discord takes some getting used to, but there's now the option to use Midjourney through a dedicated website, which is much more intuitive to use. There's also a big community, and it's possible to learn a lot from seeing other people's work, prompts and tutorials.

Midjourney only occasionally offers free trials for limited periods. Basic membership costs $10 a month. That allows you up to three concurrent jobs and 3.5 hours of GPU time a month. Plans go all the way up to the 'Mega plan'. At $120 a month, this gives you up to 12 fast concurrent jobs and 60 hours a month of fast GPU time.
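Working out the effective price of an hour of GPU time makes the plans easier to compare. Here's a quick sketch in Python that uses only the figures quoted above; prices and allowances change, so treat the numbers as illustrative.

```python
def cost_per_gpu_hour(monthly_price_usd, gpu_hours_per_month):
    """Effective price of one hour of GPU time on a monthly plan."""
    return monthly_price_usd / gpu_hours_per_month


# Figures as quoted in this article: (monthly price in USD, GPU hours per month)
plans = {"Basic": (10, 3.5), "Mega": (120, 60)}

for name, (price, hours) in plans.items():
    print(f"{name}: ${cost_per_gpu_hour(price, hours):.2f} per GPU hour")
```

On those figures, Basic works out at roughly $2.86 per GPU hour and Mega at $2.00, so the bigger plan is only better value if you'll actually use the extra time.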

The best AI image generator for ease of use

4. DALL-E 3

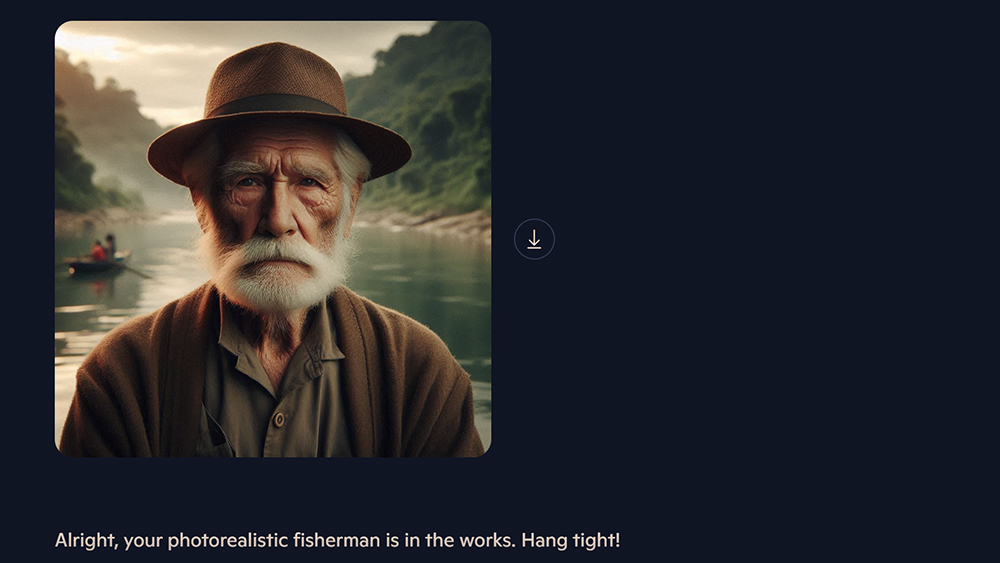

OpenAI's DALL-E 3, released in late 2023, is its most powerful model yet, and it also benefits from integration with ChatGPT, OpenAI's chatbot. This integration means that you can ask ChatGPT for help creating your prompts using more conversational language.

This allows a novel back-and-forth dialogue in which you ask ChatGPT to improve the images it generated. The downside of accessing DALL-E 3 through ChatGPT is the cost, since it requires a ChatGPT Plus subscription at $20 a month.

You can also use DALL-E 3 for free via Bing Chat and Bing.com/create and via Microsoft Copilot, but we find that the results tend to be less detailed and accurate than when using the model in ChatGPT. In all cases, I found the images generated to be coherent and to accurately reflect text prompts.

But while DALL-E 3 produces more detailed and realistic results than its predecessor, its photography styles are unlikely to fool anyone into thinking that they're not AI-generated. They have that cartoony AI softness and glow. When asking for a shallow depth of field, the result tends to look unnatural, like the artificial bokeh created by camera phone apps.

The best open-source AI image generator

5. Flux AI

Flux is a relative newcomer, and it has rapidly replaced Stable Diffusion as our pick of the best open-source AI image generator. As it's open source, you can use it for free and, with a bit of technical know-how, you can tweak the model using fine-tuning scripts.

Note that only the Dev and Schnell (fast in German) models of Flux are open source. The more powerful Pro model requires payment. Nevertheless, I've found the open-source models to be capable of producing impressively consistent results.

There are several ways to access and use the generators. If you have a powerful enough computer, you can run Flux locally on your device. Alternatively, you can try it via Hugging Face or through several third-party sites, including Replicate. The Pro version is available via Freepik.

We found that Flux works well locally on Windows computers via Forge, a free version of the Automatic1111 Stable Diffusion package updated to handle Flux as well as SD models and packed into the Pinokio launcher. Just note that you'll need a decent Nvidia graphics card to run it.

The great thing about running Flux locally is that you get a lot of control, including over size, prompts, scale and steps. Once an image is generated, a second set of editing tools includes inpainting. Another big advantage of running an open-source model like Flux locally is the ability to provide further training by using low-rank adaptation (LoRA) models.

These fine-tuning scripts can enable Flux to generate content it wasn't trained with (or wasn't trained well with), including specific artistic styles or specific faces (there are ethical issues here: we advise against using real people's faces without their permission). Several images created using Flux with XLabs' LoRA went viral this year because of how realistic they looked, even surpassing the kind of output we expect from Midjourney. While these were presumably the results of many hours of prompting, they demonstrate the huge (and slightly scary) potential that Flux has.
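To see why LoRA files are small enough to share and swap in and out, here's a minimal sketch of the low-rank update, assuming the standard LoRA formulation: the model's original weight matrix W stays frozen, and a small learned product B·A, scaled by alpha/rank, is added to it. This pure-Python version is for illustration only and isn't how Forge or any Flux tooling actually applies LoRAs; the 4096 × 4096 layer size is a hypothetical example.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(W, B, A, alpha, rank):
    """Return W + (alpha / rank) * (B @ A): frozen weights plus a low-rank update.

    W is d x k, B is d x rank and A is rank x k, so B @ A has the same shape as W.
    """
    delta = matmul(B, A)
    scale = alpha / rank
    return [[W[i][j] + scale * delta[i][j] for j in range(len(W[0]))]
            for i in range(len(W))]


def trainable_params(d, k, rank):
    """Parameters updated by full fine-tuning vs. by a LoRA of the given rank."""
    return d * k, rank * (d + k)


# Tiny worked example: a 2 x 2 weight matrix with a rank-1 update.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]   # 2 x 1
A = [[3.0, 4.0]]     # 1 x 2
print(apply_lora(W, B, A, alpha=1.0, rank=1))   # [[4.0, 4.0], [6.0, 9.0]]

# Hypothetical 4096 x 4096 layer with a rank-16 LoRA.
full, lora = trainable_params(4096, 4096, 16)
print(full, lora, f"{lora / full:.2%}")
```

With these numbers, the LoRA trains well under 1% of that layer's parameters, which is why LoRA files are far smaller than the model they modify.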

The best AI video generator

6. Runway AI

Several promising-looking AI video generators, such as OpenAI's Sora and Meta's Movie Gen, are still in development and aren't open to public access. Meanwhile, Adobe plans to roll out its Firefly AI video model soon, and it's already added a Firefly-powered Generative Extend tool to Premiere Pro (like Generative Fill in Photoshop but to extend video footage).

But for the moment, Runway is the best AI video generator I've tested that provides public access to a wide range of generative AI tools for generating and editing videos. It also allows users to generate images and to add movement to them (simply paint over an image with its beta Motion Brush, and it will turn into a motion image).

Runway has a whole range of generative AI tools, including green screen for changing or removing the background, object removal and a tool to replace sections of a video image. However, I've found that a lot of the tools in Runway are difficult to get good results from. We are talking about video here – basically a series of lots and lots of images, so all the weird artifacts that come with AI image generators are multiplied.

Motion is often glitchy and uncanny-looking. Cloud movement can look fairly convincing if you want to fake a timelapse from a still image, but other subjects are prone to AI weirdness. I found the image section replacement tool to be particularly hit-and-miss.

Despite limited usability for now, Runway is a pioneer in this area and it's quickly improving. There's a strong community and a lot of tutorial videos to help you get up to speed. The free plan doesn't go far, and a standard subscription costs $12 per month per user.

The best free basic AI image generator

7. Perchance

We think the best basic AI image generator for those who want to learn how the tech works with no fuss and no payment is now Perchance, which uses a combination of Stable Diffusion and Meta's Llama 2. There's no need to create an account, no need to run any code and no limit to how many images you can generate. Just go to the website, type what you want to see in the text field box, and Perchance will generate up to 30 images based on your text prompt.

That's pretty impressive for an ad-supported free tool, since many AI image generators give you only four options per generation. The results don't come close to Midjourney, Flux or even DALL-E 3 in terms of realism, but again, this is a completely free tool. And unlike other free AI image generators such as Craiyon, Perchance allows you to set quite a few parameters, providing some control over generations. You can choose an art style and image shape, and even fill in an 'anti-description' field where you can type in things that you don't want to appear in the image.

The maximum resolution is low, and there's no inpainting, outpainting or image-to-image generation. It's not intended as a professional tool, and the developer makes no claim that it's commercially safe.

The lack of realism, consistency and editing tools is no reason to write Perchance off. It's capable of turning up surprises that look quite reasonable, and the wide variety of art styles, ranging from photography from different eras to Disney characters, oil paintings and anime, makes it surprisingly diverse. That could make it an interesting springboard for testing out ideas (it also has a 'cursed photo' option that can throw up disturbing results).

The best AI image generator for text

8. Ideogram

Ideogram is another relatively recent contender among the best AI image generators. It launched in August 2023 and hasn't become as well known as many of the others on this list, but it quickly stood out for one key aspect: text. While Stable Diffusion, DALL-E, Midjourney and Google's Imagen have all made big improvements in their ability to render accurate, legible and consistent text in images, Ideogram is in a league of its own, allowing users to specify not just a word or short phrase but entire taglines.

The results can still be hit and miss. Text still often contains mistakes like doubled letters, but generally I've found it much more reliable at handling prompts that ask for a specific phrase to be placed on a sign, poster or elsewhere in an image – either within an image generated by the model itself or as an overlay on an existing image (the latter with a paid plan only). That makes it potentially useful for photographers who want to create composites adding stylised text to their own photos.

But Ideogram isn't just about text; I've found it to be a fairly impressive image generator too. Photographic-style images don't look as realistic as in Midjourney or Flux, especially when it comes to relatively complex scenes, but they can be passable for illustrations when using version 2.0. If you're struggling with prompts, the Magic Prompt feature uses AI to rewrite prompts to get the best results, which is comparable to using ChatGPT to write prompts for DALL-E 3.

The free tier is not hugely generous. You only get enough daily credits for around 10 prompts, and the download resolution is limited. You'll need to upgrade all the way to the Plus plan ($16/month) if you want to upload your own images.

How to choose the best AI image generator

There are several things to consider to choose the best AI image generator for you. They include what you want to use it for, how much time you want to spend getting set up, what kind of results you expect, whether you're prepared to pay for it and if you accept how it was trained.

If you've never used an AI image generator and want to see very quickly how they work, you can jump straight into Perchance and experiment. For the best balance between ease of use and quality of results, however, we'd suggest trying Adobe Firefly or DALL-E 3, which are capable of producing more coherent and accurate images, including in more realistic photography styles.

Stable Diffusion and Flux are open source and can be used for free, but while running them locally provides privacy and control, it also requires a bit of technical know-how and a powerful computer. Meanwhile, Midjourney is very impressive when it comes to generating a wide range of styles, including very convincing photorealism. It also has a strong community to learn from, but it's no longer available for free.

We think that if you want a guiding hand, and you want to be sure that nobody's copyright was infringed in the process, Adobe Firefly is the best AI image generator for most people interested in fully blown AI image generation. Meanwhile, Photoshop, which has generative AI tools powered by Firefly, is the most useful tool for photographers who want to use generative AI to edit images.

No AI image generator has a 100% success rate in interpreting the prompts it's given. The same prompt used in the same image generator will return different results each time (unless you specify the same seed value). Sometimes the results may accurately reflect what you described; at other times they may be way off. This is something to consider when using text-to-image generators that charge credits, as you're likely to use up some of them generating images that you don't like and won't go on to use.
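The role of the seed can be illustrated with a toy sketch in Python: a generator starts from pseudo-random noise, and fixing the seed fixes that noise. The `initial_noise` function is hypothetical and only stands in for the much larger noise array a real model uses; reproducing an image exactly also needs the same model, prompt and settings.

```python
import random


def initial_noise(seed, size=8):
    """Toy stand-in for the random starting noise of an image generator.

    A dedicated Random instance seeded with `seed` always yields the same
    sequence of values, so the same seed gives the same starting point.
    """
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


first = initial_noise(seed=1234)
second = initial_noise(seed=1234)
other = initial_noise(seed=5678)

print(first == second)   # same seed, so identical starting noise
print(first == other)    # different seed, so different starting noise
```

Because everything after the starting noise is deterministic for a given model and settings, a different seed is what makes the same prompt produce a different image.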

How we tested the best AI image generators

We ran similar text prompts through each of the AI image generators, asking them to produce a range of different kinds of images, from illustration to photorealism. We compared the quality of results in different styles, the reliability of each generator's interpretation of text prompts and the ease of use of their UIs. Where they exist, we also tested image-to-image tools, editing features and other parameters.

The technology is evolving so fast that models can be improved and the features available expand from one month to the next, so we will be reassessing all the AI image generators listed regularly in order to update this guide.

AI image generators FAQs

What are AI image generators?

AI capabilities have existed in programs like Photoshop, Lightroom and Luminar Neo for a long time, but these have tended to use AI to identify parts of an image. AI image generators are a different beast. They use generative AI technology to create new imagery, or parts of an image, from scratch.

How do the best AI image generators work?

AI image generators are based on machine-learning models that have been trained on vast datasets of millions of images and captions to recognise the relationship between images and text. You type in a short text prompt describing what you want to create, and the AI model will attempt to create that image based on the images and captions it's been trained on.

Today's best AI image generators use diffusion models. They start out from random noise and refine it over a series of steps, gradually moving towards an image that matches the prompt. In some generators, you can choose how many steps you want the model to take, which will influence how long it takes to generate an image.
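The step-by-step idea can be shown with a deliberately simplified toy in Python. It's a sketch under heavy assumptions, not a real diffusion sampler: `toy_denoiser` is hypothetical and simply "knows" the target values, where a trained model would estimate them from the noisy image and the prompt, and the 1/t step size is an arbitrary choice for illustration. What it does show is that each step means one call to the model, which is why more steps take longer.

```python
import random


def toy_denoiser(noisy, target):
    """Hypothetical stand-in for a trained network.

    A real model predicts the clean image from the noisy one plus the prompt;
    this toy simply returns the target so the loop structure is easy to follow.
    """
    return list(target)


def toy_sample(target, steps, seed=0):
    """Start from random noise and refine it over `steps` model calls."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]   # pure noise to begin with
    model_calls = 0
    for t in range(steps, 0, -1):
        estimate = toy_denoiser(x, target)
        model_calls += 1
        # Move 1/t of the remaining distance towards the estimate; on the
        # final step (t == 1) the sample lands on the estimate.
        x = [xi + (ei - xi) / t for xi, ei in zip(x, estimate)]
    return x, model_calls


target = [0.2, 0.5, 0.9]   # hypothetical "clean image" of three pixels
image, calls = toy_sample(target, steps=20)
print(calls)                          # one model call per step
print([round(v, 3) for v in image])
```

In a real generator the model's estimate is imperfect and improves as the noise is removed, so the number of steps trades generation time against how well the image resolves.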

How do I get the best results from an AI image generator?

Even the best AI image generators can produce terrible results. By nature there's an element of randomness to the process: the same prompt that resulted in a great image one time won't necessarily give you the same image when you use it again.

Generally, the more information in the prompt the better. A lack of detail tends to produce unimpressive results, while mentioning things like the style of photography and even a brand and model of camera and the focal length of a lens can lead to better results if you're aiming for photorealism. Some people have reported getting great results from DALL-E 2 by using 'Graflex' in prompts.

Finally, even the best AI image generators have many quirks and produce images with strange artefacts you'll want to fix in traditional image editing software. Human figures are particularly prone to contortions, although problems can often be corrected in Photoshop. Another drawback with AI-generated images can be their resolution, particularly if you want to use them at a large size. Some AI image generators have their own upscaling tools, while with others you will need to use other software such as Topaz Gigapixel AI.

Why are the best AI image generators controversial?

There are several reasons that the best AI image generators are causing controversy. One of the main issues is the fear of misuse to create violent, abusive or pornographic content, and also the fear that people may try to pass off images generated by AI as real, spreading fake news or defaming people.

There are also big questions about copyright, both whether someone can own the copyright to an image they created using AI and whether it was legal to train AI models on images trawled from the web without the consent of their original creators. Finally, some people have concerns about what it might mean for the future of jobs in some creative sectors.

What are inpainting and outpainting in AI image generators?

These are two types of AI-powered editing that allow you to modify either your own images or images generated by AI. Inpainting allows you to paint over part of an image and have the AI generate something else in its place. Outpainting allows images to be "uncropped", expanding the picture beyond the original frame (like Generative Expand in Photoshop). The latter can be useful for photographers who cropped an image too far or didn't have a wide enough lens to capture the ideal composition.
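Both techniques rely on a mask that tells the model which pixels to generate and which to keep. As a minimal sketch, assuming the common convention that 1 marks pixels to generate and 0 marks pixels to keep (tools differ), here's how an outpainting canvas and mask could be set up in Python. The `outpaint_mask` helper is hypothetical; an inpainting mask is the same idea with the 1s inside the original frame.

```python
def outpaint_mask(width, height, left=0, right=0, top=0, bottom=0):
    """Build the enlarged canvas for outpainting.

    Returns the new canvas size, the (x, y) offset at which the original image
    is placed, and a mask with 1 for new pixels the model must generate and 0
    for original pixels that are kept.
    """
    new_w = width + left + right
    new_h = height + top + bottom
    mask = [[0 if (left <= x < left + width and top <= y < top + height) else 1
             for x in range(new_w)]
            for y in range(new_h)]
    return (new_w, new_h), (left, top), mask


# "Uncrop" a tiny 4 x 3 image by two pixels on each side.
size, offset, mask = outpaint_mask(4, 3, left=2, right=2)
print(size, offset)   # (8, 3) (2, 0)
for row in mask:
    print("".join(str(v) for v in row))
```

Each printed row reads 11000011: the original pixels sit in the middle and the new columns on either side are the ones the model fills in.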

Joe is a regular freelance journalist and editor at our sister publication Creative Bloq, where he writes news, features and buying guides on a range of subjects including AI image generation, logo design and new technology. A writer and translator, he worked as a project manager at Buenos Aires-based design and branding agency Hermana Creatives, where he oversaw photography and video production projects in the hospitality sector. When he isn't exploring new developments in AI image creation, he's out snapping wildlife and landscapes with a Canon EOS R5 or dancing tango.