AI image generators are the most radical new development we've seen in the visual arts for some time – perhaps since the advent of digital photography. The technology has advanced massively in the last year, and AI-generated images are now everywhere online and starting to appear in commercial use. The impact is being felt in fields from illustration to graphic design, and many are asking what AI imaging means for photography.

For some, AI image generators are a form of alchemy that unlocks all kinds of new creative possibilities. For others, they're equivalent to theft and a threat to jobs in the creative sectors. The one thing that seems undeniable is that anyone who works in image creation needs to know about them.

This article aims to bring you up to speed. We'll look at some of the most frequent questions about the best AI image generators, from how they work to why they're so controversial, why photographers might want to use them (and why they might not), and what they might mean for the future of photography.

What is an AI image generator?

Artificial intelligence is transforming and speeding up processes in all kinds of sectors, from the search for new drugs to computer programming. Many photographers will have used the AI capabilities of software like Photoshop CC and Luminar Neo for routine editing work, using tools that can detect certain parts of an image and adjust or remove objects.

Text-to-image AI image generators use generative AI. Rather than merely analyzing and editing parts of existing content, they can generate entirely new content based on the images they've been trained with. They're trained on datasets containing billions of images and captions, which enables them to learn the relationship between text and pictures. The result is programs that can generate images that closely match a text prompt.

How do AI image generators work?

AI image generators have been trained on combinations of existing images and captions, including photography, paintings, illustrations – really any image found online. They learn to identify what kinds of pictures match certain phrases, be it 'in the style of Picasso' or '50mm portrait photography', allowing them to create new and unique combinations of visual elements when given a text prompt.

The technology behind them varies, but the most recent AI image generators use what are known as diffusion models. During training, these models gradually destroy their training images by adding Gaussian noise, then learn to reverse the process by removing that noise step by step. To generate a new image, the model starts from random noise produced from a seed and repeatedly removes noise, guided by the text prompt, until an image emerges.
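To make the noising idea more concrete, here is a heavily simplified sketch of the arithmetic used in DDPM-style diffusion models, in plain Python. It assumes a toy "image" of four brightness values, a linear noise schedule of 1,000 steps and, crucially, that we already know the exact noise that was added. In a real generator a trained neural network has to estimate that noise, and generation repeats many small denoising steps starting from pure noise. The function names are illustrative, not any product's API.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    # Noise variance added at each step (an assumed linear schedule).
    return [beta_start + (beta_end - beta_start) * t / (num_steps - 1)
            for t in range(num_steps)]

def cumulative_alphas(betas):
    # alpha_bar[t]: fraction of the original signal's variance retained at step t.
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out

def add_noise(x0, t, alpha_bar, rng):
    # Forward ("destroying") process: jump straight to step t by mixing the
    # clean image with Gaussian noise drawn from a seeded random generator.
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    a = alpha_bar[t]
    xt = [math.sqrt(a) * p + math.sqrt(1.0 - a) * n for p, n in zip(x0, noise)]
    return xt, noise

def remove_noise(xt, noise_estimate, t, alpha_bar):
    # Invert the forward formula. A trained model would supply noise_estimate;
    # here we pass in the true noise purely to show the arithmetic.
    a = alpha_bar[t]
    return [(x - math.sqrt(1.0 - a) * n) / math.sqrt(a)
            for x, n in zip(xt, noise_estimate)]

image = [0.2, -0.5, 0.9, 0.0]              # toy four-pixel "image"
alpha_bar = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(42)                    # the random seed
noisy, noise = add_noise(image, 999, alpha_bar, rng)
restored = remove_noise(noisy, noise, 999, alpha_bar)
print(alpha_bar[-1])   # very small: by the last step the image is almost pure noise
print(restored)        # matches `image` up to floating-point rounding
```

Because the seed fixes the noise, reusing the same seed with the same settings reproduces the same starting point, which is why many generators let you note and reuse a seed.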


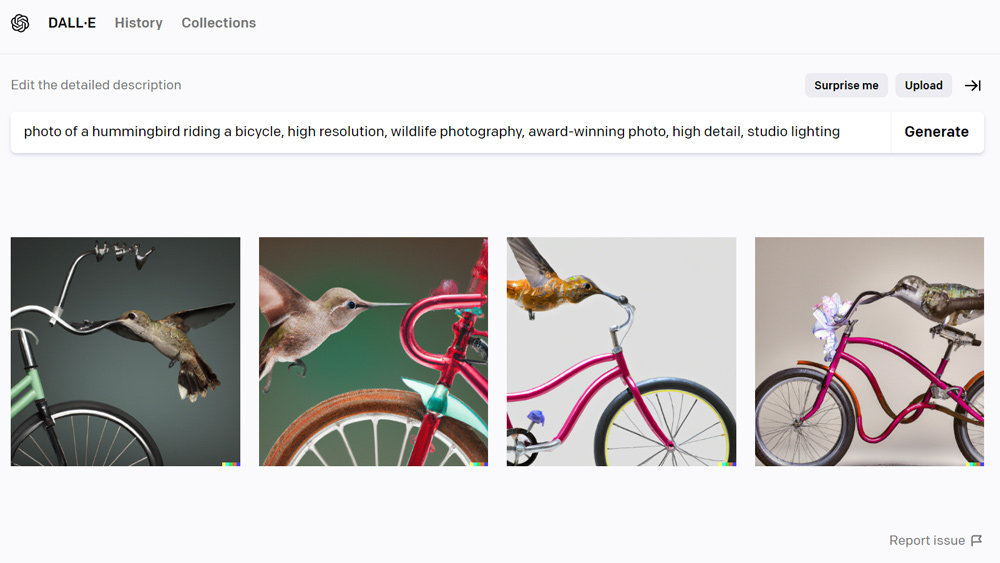

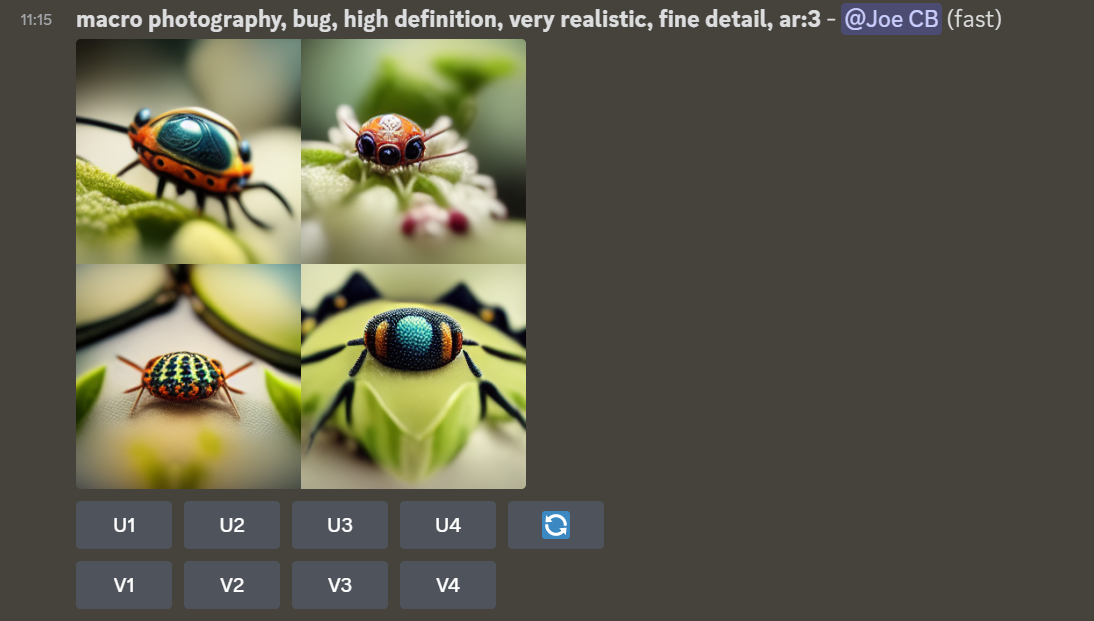

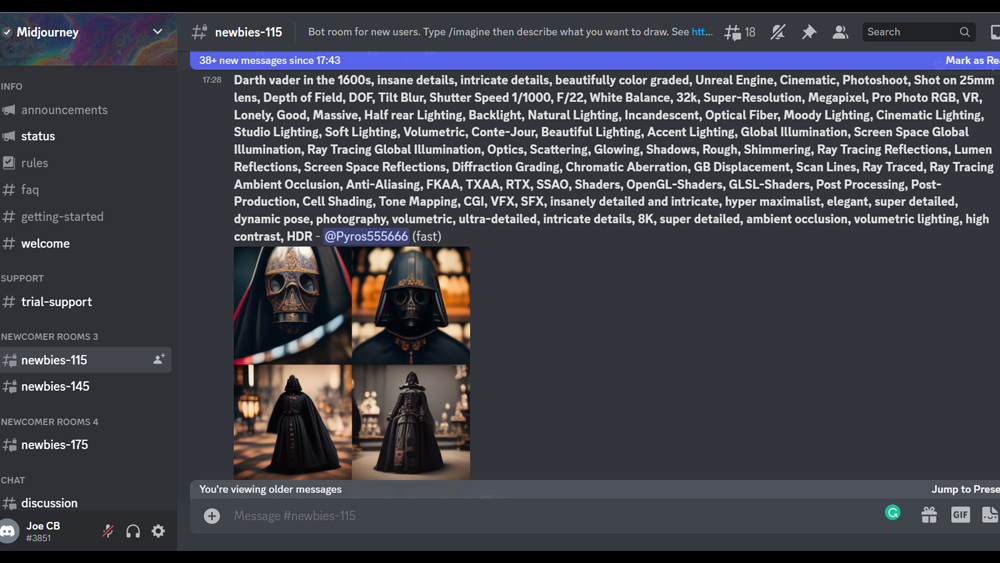

That might sound complicated, but to use most AI image generators, you simply type in a text prompt, wait, and the model will generate images (often four) that it thinks match your description. Some models let you change parameters such as the number of images to create, the number of steps and the size of the canvas, all of which affect how long it takes to generate images.
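As a rough illustration of why those settings affect waiting time, the sketch below models generation time as growing in proportion to the number of images, the number of steps and the pixel count. The settings object, the linear model, the 512x512 baseline and the per-step timing are all hypothetical; real timings depend on the model and hardware, and large canvases can cost more than this simple scaling suggests.

```python
from dataclasses import dataclass

@dataclass
class GenerationSettings:
    # Hypothetical settings object; the names are illustrative, not a real API.
    num_images: int = 4
    steps: int = 50
    width: int = 512
    height: int = 512

def estimated_seconds(s: GenerationSettings, seconds_per_step_at_512: float = 0.1) -> float:
    # Assumption: every step costs about the same, and cost scales linearly
    # with pixel count relative to a 512x512 baseline.
    pixel_factor = (s.width * s.height) / (512 * 512)
    return s.num_images * s.steps * seconds_per_step_at_512 * pixel_factor

default = GenerationSettings()
quick = GenerationSettings(num_images=1, steps=25)
big = GenerationSettings(width=1024, height=1024)
print(estimated_seconds(default))  # 4 images x 50 steps x 0.1 s per step
print(estimated_seconds(quick))    # one image, half the steps
print(estimated_seconds(big))      # four times the pixels, so about four times as long
```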

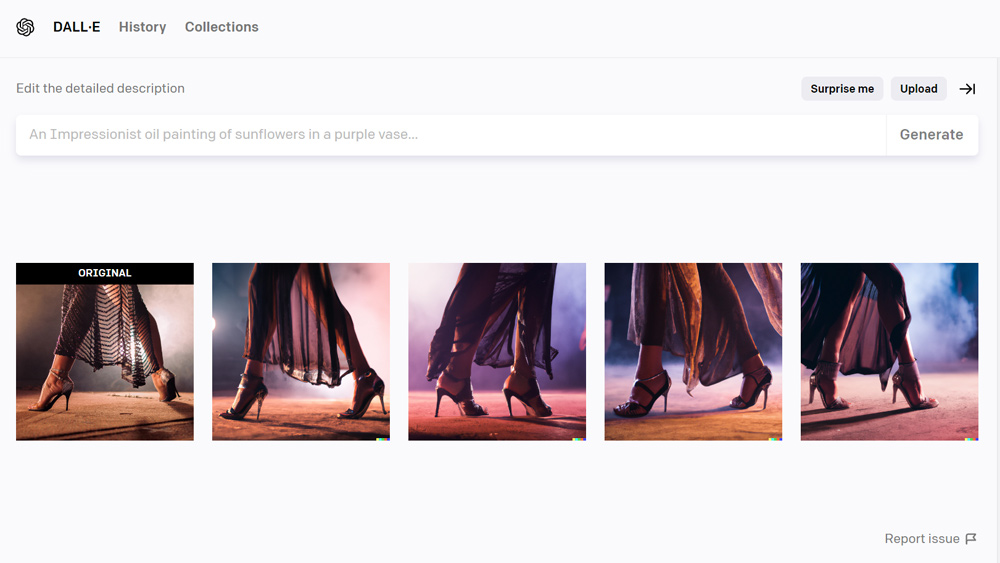

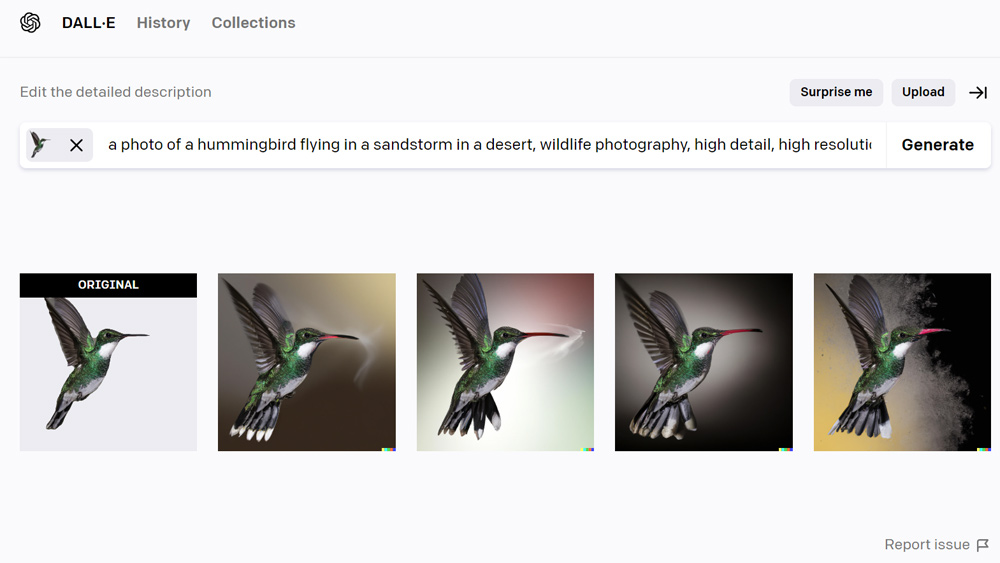

Many AI image generators also have image-to-image editing tools. These allow users to upload an existing image and add a text prompt to generate variations. A process known as inpainting allows parts of a composition to be removed and replaced by new visuals created by AI.
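The masking idea behind inpainting can be sketched in a few lines. This toy example assumes greyscale images stored as nested lists and a mask in which 1 marks the area to replace; it shows only the final compositing step, which keeps every unmasked pixel from the original. Real inpainting models also use the surrounding pixels to guide what they generate so that the new content blends in, which this sketch does not attempt.

```python
from typing import List

Image = List[List[float]]

def composite_inpaint(original: Image, generated: Image, mask: List[List[int]]) -> Image:
    """Take generated pixels where mask == 1 and keep original pixels elsewhere."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        result.append([g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)])
    return result

original = [[0.1, 0.1, 0.1],
            [0.1, 0.9, 0.1],   # 0.9: an unwanted object in the centre
            [0.1, 0.1, 0.1]]
generated = [[0.5, 0.5, 0.5],
             [0.5, 0.2, 0.5],
             [0.5, 0.5, 0.5]]
mask = [[0, 0, 0],
        [0, 1, 0],             # only the centre pixel is replaced
        [0, 0, 0]]
print(composite_inpaint(original, generated, mask))
```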

Which are the most popular AI image generators?

The main players involved in the explosion of AI image generators are OpenAI's DALL-E 2, Stability AI's Stable Diffusion and Midjourney. DALL-E 2 and Stable Diffusion have their own browser-based image generators and also offer APIs that developers can build into their own apps to create new image generators with specific focuses, while Midjourney is used mainly through Discord. Tech giants like Google, Meta (the owner of Facebook) and Microsoft are also working on text-to-image generators, but access remains restricted.

How easy is it to use AI image generators?

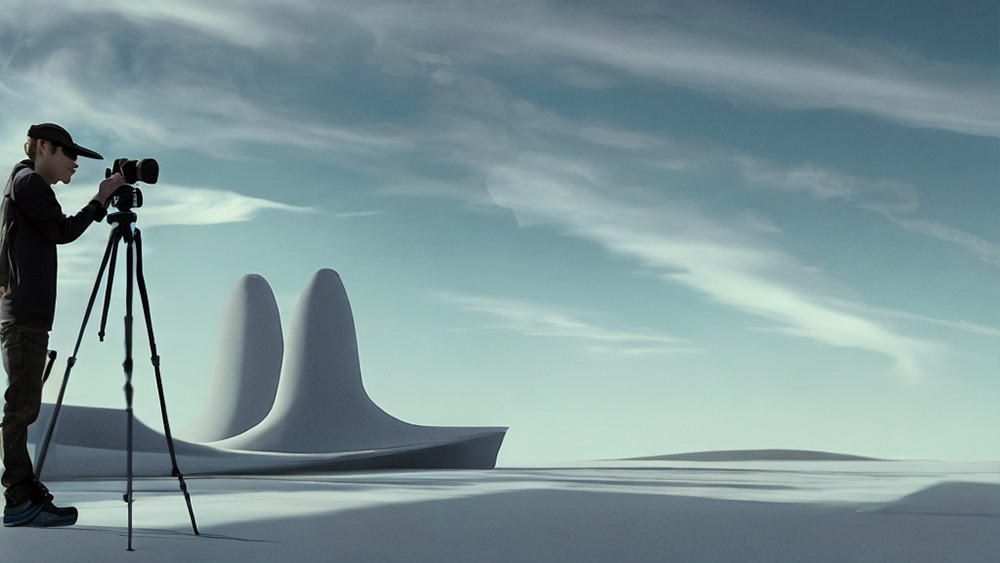

One of the things that makes AI image generators so powerful is just how easy they are to use. At the moment, most of the tools are browser-based and don't have much in the way of settings to tweak. Once you have an account, you can go to the website, type in a text prompt and generate images. In theory, anyone can type in "a vase of flowers in the style of Van Gogh" or "a photographer taking a selfie in a desolate futuristic landscape" and get something that at least vaguely resembles it, with no need to learn any particular skills.

I say in theory because, although AI image generators are incredibly easy to use and can be great fun if you're happy to let them surprise you, getting good results can require a lot of trial and error, potentially trying hundreds of prompts and variations. It can take so long that it might make more sense to... well, take a photo.

Since so much depends on the prompt, 'AI prompt engineer' has suddenly appeared as a real job description. There are even online prompt marketplaces, such as PromptBase, which sell prompts for creating specific kinds of image in particular AI image generators.

How do people get the best results from AI image generators?

Generally the more detailed the prompt, the better the results. Although it depends on what the AI model has been trained on, words like 'masterpiece', 'ultrarealistic', 'art photography', 'UHD' and 'Kodak' are said to work well. Specifying focal lengths, camera models or even the name of stock photography sites also seems to help when aiming for photorealistic results.
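Because so much trial and error is involved, some people script their prompts. The hypothetical helpers below assemble a subject and modifier keywords into a comma-separated prompt and produce one prompt for every combination of a few options, so each variation can be tried in turn. Comma-separated keywords are a common convention rather than a requirement of any particular generator, and the modifiers used here are simply ones mentioned above.

```python
from itertools import product
from typing import Iterable, List

def build_prompt(subject: str, modifiers: Iterable[str]) -> str:
    # Join the subject and non-empty modifiers with commas, skipping repeats
    # (case-insensitive) so a keyword is never added twice.
    parts: List[str] = [subject.strip()]
    seen = {parts[0].lower()}
    for m in modifiers:
        m = m.strip()
        if m and m.lower() not in seen:
            parts.append(m)
            seen.add(m.lower())
    return ", ".join(parts)

def prompt_variations(subject: str, *option_groups: List[str]) -> List[str]:
    # One prompt per combination, taking one option from each group.
    return [build_prompt(subject, combo) for combo in product(*option_groups)]

print(build_prompt("portrait of an old fisherman", ["50mm", "Kodak", "UHD", "Kodak"]))
for p in prompt_variations("a vase of flowers",
                           ["art photography", "ultrarealistic"],
                           ["50mm", "85mm"],
                           ["Kodak"]):
    print(p)
```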

Some AI image generators, such as Stable Diffusion, let you set certain parameters when you generate an image. Choosing the maximum number of generations and a higher number of steps is more likely to turn up something presentable, but the process will take longer.
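A little probability shows why generating more images helps. Assuming, purely for illustration, that each image independently has the same chance p of being usable, the chance that at least one of n images is usable is 1 - (1 - p)^n. With p = 0.1, four images give about a 34% chance and sixteen about 81%, though each extra image costs another full run of steps.

```python
def chance_of_a_keeper(p: float, n: int) -> float:
    """Probability that at least one of n independent images is usable,
    given an assumed per-image success probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return 1.0 - (1.0 - p) ** n

for n in (1, 4, 16):
    print(n, round(chance_of_a_keeper(0.1, n), 3))
```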

What are the limitations of AI image generators?

AI image generators create output based on the images they've been trained on, but they can't make value judgements on what looks good, realistic or even possible. They don't know how many fingers a human should have or how many legs a tripod should have. Less successful results often show that the model knows which objects are associated with certain phrases but doesn't arrange them correctly.

Sometimes AI-generated images can trick us at first glance, but look closer and you find strange artefacts that would need to be corrected in traditional editing programs. Human figures can be particularly problematic. Even models that can achieve photorealistic output, like DALL-E 2 and Stable Diffusion, often turn out human figures with six fingers on each hand or a leg coming out of a shoulder.

There are also limitations in the sizes of images that can be generated. Most AI models can currently only generate square images. Stable Diffusion can do other aspect ratios, but it sometimes ends up generating repetitions to fill that space, giving you two of whatever you asked for.

For resolution, Stable Diffusion can go up to 2048x2048, which is still not really enough to print at a large size. These limitations mean that many people working with AI image generators import the results into other software for editing, retouching and upscaling.
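The print-size limitation comes down to simple arithmetic. Assuming the commonly used target of 300 pixels per inch for high-quality prints, the sketch below works out how large a square image of that resolution prints (about 6.8 inches, or roughly 17cm, per side) and how much it would need to be upscaled for a chosen print width. The function names are illustrative.

```python
def print_size_inches(width_px: int, height_px: int, ppi: float = 300.0):
    # Physical size at the given pixel density (pixels per inch).
    return width_px / ppi, height_px / ppi

def upscale_factor(width_px: int, target_width_in: float, ppi: float = 300.0) -> float:
    # How many times wider the image must become to print at target_width_in.
    return target_width_in * ppi / width_px

w_in, h_in = print_size_inches(2048, 2048)
print(f"{w_in:.2f} x {h_in:.2f} in")
print(upscale_factor(2048, 20))   # factor needed for a 20-inch-wide print
```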

More conceptually, while some see AI image generators as useful springboards for ideas, others point out that they can’t create images of anything they haven't already seen. That means that, unlike humans, they're not capable of creating anything truly original.

Why are AI image generators controversial?

AI image generators are controversial for a range of ethical and legal reasons. Many models were trained on billions of images trawled from the web without permission from the owners, who are not credited or compensated when the AI uses their work. Some argue that this is not only copyright theft but could also jeopardise the original artist's or photographer's future work if the model learns to replicate their style.

The ability to create photorealistic images of practically anything raises the same concerns about privacy and authenticity that surround the related technology behind deepfakes. If AI images get good enough, how will we be able to tell what's real and what's been created using a machine?

Generative AI is advancing so fast that it’s outpacing our ability to foresee future risks, but there are concerns that the technology could be used for fraud, to spread fake news and to create abusive content and pornography. AI image generators can also reflect the biases of the material that they're trained on, whether that's a tendency to generate images of people of a particular race or to sexualise images of women. There's also that nagging fear affecting many industries that AI could put people out of work.

What do AI image generators mean for photography?

We don't see AI image generators killing the camera, but they may change photography in several ways. There will always be a need for original imagery for everything from documentary to product photography, even if AI image generators are used to create part of the final scene. But there are situations in which a real scene or real person may not be necessary.

Stock imagery may be one of the first areas to feel the impact of AI-generated 'photos'. There's no need for model release forms when the human beings aren't real, so some think AI-generated images could take off in lifestyle stock imagery, which could see 'prompt engineers' competing with photographers in the sector. The same could apply to imagery for things like book covers.

Getty Images has banned AI-generated content for now, but Shutterstock has done a deal with OpenAI to incorporate DALL-E 2 into its site and Adobe Stock has published guidelines allowing the submission of AI imagery.

Other types of software and even cameras themselves are likely to make more and more use of AI to make smarter decisions, for example selecting when to take a shot so a subject's eyes aren't closed. Many photographers may find that text-to-image generators also have a place in their workflows.

Antti Karppinen is a photographer and digital artist who has already used generative AI for commercial projects, for example to place models in different settings after taking photographs of them and using the images to train the AI. He says he's keeping an open mind and exploring how AI image generators could create opportunities for his work.

Re-imagining my own images with Artificial intelligence https://t.co/1Efn9Ltgc4 #ai #aiartist #midjourney pic.twitter.com/5YcJNh6qTN (November 9, 2022)

"I think we are in the similar place when cameras came and the traditional painters were arguing that photography is not art. Or when digital cameras came and the film shooters were saying that it is not real photography," he says. "Photography will always be there, but AI is definitely going to play a big role in the future when we create pictures. Change is always frightening to some, but I will always try to adapt."

Karppinen agrees that photography will always be needed, but he also recognises that AI image generators are changing how we create images, and we don't yet know exactly where that path will take us. We also don't know how the public will react. People may tire of not knowing whether an image can be trusted. Today, advertising in many countries must carry disclaimers with variations of phrases like "the human figure has been digitally altered". If they soon start to say "the human figure does not exist," authenticity could become more highly prized.


Joe is a regular freelance journalist and editor at our sister publication Creative Bloq, where he writes news, features and buying guides on a range of subjects including AI image generation, logo design and new technology. A writer and translator, he worked as a project manager at Buenos Aires-based design and branding agency Hermana Creatives, where he oversaw photography and video production projects in the hospitality sector. When he isn't exploring new developments in AI image creation, he's out snapping wildlife and landscapes with a Canon EOS R5 or dancing tango.